Asset Inventory for Internal Network: problems with Active Scanning and advantages of Splunk

In the previous post, I wrote about Asset Inventory and Vulnerability Scanning on the Network Perimeter. Now it's time to write about the Internal Network.

I see the typical IT infrastructure of a large organization as a monstrous favela, like the Kowloon Walled City in Hong Kong. In the beginning it was probably well designed, but for years it has been heavily affected by spontaneous development in various projects, as well as by multiple acquisitions. And now very few people in the organization really understand how it all works and who owns each piece.

There is a common belief that Active Network Scanning can be used for Asset Inventory in an organization. Currently, I'm not a big fan of this approach, and here I will try to explain its disadvantages and mention some alternatives.

Active Scanning

Let's say you bought a Vulnerability Scanner (or a Vulnerability Management solution). The vendor, of course, promised that it would solve all your infrastructure problems. And you want to use it to scan your entire organization.

Subnets

You most likely do not know about all the active hosts in the organization, so you want to scan by subnets. This raises questions for your IT team and for your interaction with them:

- Do you know about all the subnets that are in use in the organization?

- Is there any trusted source in which this information is updated regularly?

Even if there is an up-to-date list of subnets, it is most likely not in a formalized form; for example, it may be a set of Confluence pages. You can read about how to work with them in "Confluence REST API for reading and updating wiki pages".
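If the subnet list lives in a wiki page or a spreadsheet, it is worth normalizing it before feeding it to a scanner. Here is a minimal Python sketch, assuming the list has already been extracted as free text (for example, copied from a Confluence table); the function name `parse_subnets` is hypothetical. It relies only on the standard `ipaddress` module to skip broken entries, fix host bits and merge overlapping or adjacent ranges.

```python
import ipaddress
import re

# Matches anything that looks like an IPv4 CIDR block, e.g. "10.1.2.0/24"
CIDR_RE = re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}/\d{1,2}\b")


def parse_subnets(text):
    """Extract, validate and merge IPv4 subnets from informal text (hypothetical helper)."""
    networks = []
    for candidate in CIDR_RE.findall(text):
        try:
            # strict=False turns "10.2.0.5/16" into "10.2.0.0/16" instead of failing
            networks.append(ipaddress.ip_network(candidate, strict=False))
        except ValueError:
            # Invalid octets or prefix lengths, e.g. "300.1.1.0/24": skip the entry
            continue
    # Merge duplicates, overlaps and adjacent ranges into a minimal sorted list
    return list(ipaddress.collapse_addresses(networks))


wiki_text = """
Office LAN: 10.1.0.0/24 (owner: IT Ops)
Office LAN part 2: 10.1.1.0/24
Servers 10.2.0.5/16, see ticket
Lab (decommissioned?) 300.1.1.0/24
"""
print(parse_subnets(wiki_text))
# → [IPv4Network('10.1.0.0/23'), IPv4Network('10.2.0.0/16')]
```

The two office /24 ranges collapse into one /23, and the invalid lab entry is silently dropped; in real use you would probably want to log such entries and ask their owners.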

Scan Tasks

Let's say that you know about all the subnets. Will you just create one task with all of them and run it? Most likely this will be extremely impractical, and you will need to create multiple tasks in your scanner. Read how to do this in my posts about Qualys and Tenable Nessus.
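One simple way to split the subnets into tasks is to cap the number of addresses per task. Below is a minimal sketch, assuming a hypothetical per-task limit `max_addresses` (a realistic value depends on your scanner, licence and network); `split_into_tasks` is a hypothetical helper, not part of the Qualys or Nessus APIs. Subnets that are too large on their own are cut into smaller pieces with `ipaddress`'s `subnets()` method, and the rest are packed greedily.

```python
import ipaddress


def split_into_tasks(subnets, max_addresses=1024):
    """Group subnets into scan tasks of at most max_addresses addresses each."""
    # Largest power-of-two block that fits into one task
    block_bits = max_addresses.bit_length() - 1

    pieces = []
    for net in map(ipaddress.ip_network, subnets):
        if net.num_addresses > max_addresses:
            # Cut an oversized subnet into blocks of 2**block_bits addresses
            pieces.extend(net.subnets(new_prefix=net.max_prefixlen - block_bits))
        else:
            pieces.append(net)

    # Greedily fill tasks in the original order
    tasks, current, current_size = [], [], 0
    for net in pieces:
        if current and current_size + net.num_addresses > max_addresses:
            tasks.append(current)
            current, current_size = [], 0
        current.append(str(net))
        current_size += net.num_addresses
    if current:
        tasks.append(current)
    return tasks


tasks = split_into_tasks(
    ["10.0.0.0/21", "10.1.0.0/24", "10.2.0.0/24", "10.3.0.0/23"], max_addresses=1024
)
# → [['10.0.0.0/22'], ['10.0.4.0/22'], ['10.1.0.0/24', '10.2.0.0/24', '10.3.0.0/23']]
```

Each resulting list could then become a separate task in the scanner, created by hand or through its API.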

Networks Settings and Firewalls

Let's say you launched all these scan tasks. Now the questions are how long the scanning will take and how adequate the results will be.

Are you sure that all the subnets will be accessible for the scanner host?

What if the network is configured in such a way that you see something like this:

- all IP addresses are down for some subnet

- all the IP-addresses are active for some subnet

- all ports are open for some hosts in some subnet

This is quite a possible situation, and you can easily miss it in the scan results.
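Such anomalies are easy to overlook in a long report, so it may be worth checking for them automatically. Here is a minimal sketch, assuming the results were exported into a simple hypothetical structure: a dict mapping each scanned subnet to `{ip: [open ports]}` for the hosts reported as alive. The function name and the `all_ports_threshold` value are also hypothetical.

```python
import ipaddress


def suspicious_subnets(results, all_ports_threshold=1000):
    """Flag scan results that look like firewall or proxy artifacts."""
    findings = []
    for subnet, hosts in results.items():
        net = ipaddress.ip_network(subnet)
        # Usable host addresses: network and broadcast excluded for prefixes shorter than /31
        total = net.num_addresses - 2 if net.prefixlen < 31 else net.num_addresses
        alive = len(hosts)
        if alive == 0:
            findings.append((subnet, "all addresses down"))
        elif alive >= total:
            findings.append((subnet, "all addresses alive"))
        # A host answering on a huge number of ports is probably not a real host
        for ip, ports in hosts.items():
            if len(ports) >= all_ports_threshold:
                findings.append((ip, f"{len(ports)} open ports"))
    return findings


results = {
    "10.1.0.0/30": {},
    "10.2.0.0/30": {"10.2.0.1": [22], "10.2.0.2": [443]},
    "10.3.0.0/24": {"10.3.0.10": list(range(1, 65536))},
}
for item in suspicious_subnets(results):
    print(item)
```

None of these flags proves a problem by itself, but each is a good reason to look at the network path between the scanner and that subnet before trusting the results.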

On the other hand, let's say you really do have a network host that can see all the other hosts in the organization. Don't you think this would be a very tempting target for intruders?

Multiple scanners and appliances

Let's say you take the advice of Vulnerability Management vendors and place a separate scanner or appliance in each subnet, so that there are no firewalls between the scanner and its targets. This is exactly what Tenable Network Security recommends for Nessus deployment.

This scheme looks more workable. However, such a large number of scanners will be more difficult to administer and will most likely require additional costs. And, of course, the master and slave scanners will have to see each other, which means changes to the network configuration anyway.
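With one appliance per subnet, every target also has to be routed to the right scanner. A minimal sketch of that lookup, with hypothetical scanner names and placement: it picks the most specific (longest-prefix) subnet that contains the address and returns `None` for addresses that no scanner covers, which is useful information in itself.

```python
import ipaddress


def pick_scanner(ip, scanner_subnets):
    """Return the scanner whose subnet most specifically contains ip, or None."""
    addr = ipaddress.ip_address(ip)
    best = None
    for scanner, subnet in scanner_subnets.items():
        net = ipaddress.ip_network(subnet)
        # Prefer the longest prefix, like a routing table lookup
        if addr in net and (best is None or net.prefixlen > best[1].prefixlen):
            best = (scanner, net)
    return best[0] if best else None


# Hypothetical appliance placement
scanners = {
    "scanner-hq": "10.0.0.0/8",
    "scanner-dc1": "10.20.0.0/16",
    "scanner-dmz": "192.168.100.0/24",
}
print(pick_scanner("10.20.3.4", scanners))   # → scanner-dc1
print(pick_scanner("172.16.0.1", scanners))  # → None
```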

Approvals for the scans

Can you start the scanning process for any subnet at any time?

If we are talking about the perimeter, then most likely you can. In fact, attackers will scan you constantly anyway. What difference does it make if you do it yourself as well?

If we are talking about the internal network, a vulnerability scan can adversely affect the operation of the systems. And it will be very convenient for IT to say that any problem with a service happened because of your active scanning.

To avoid this, you have to get approval for each scan from the system owners. You will be lucky if you can also agree on a scanning schedule. In any case, reaching these agreements will take a lot of work.

If you want to scan with authentication, you will also need to obtain permission for this and get credentials from the system owners.

Conclusions on active scanning

As you can see, there are many nuances. And I'm not even writing about IPv6, where sweeping whole subnets is practically impossible: a single /64 subnet contains 2^64 addresses.
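A quick back-of-the-envelope calculation shows why. The sketch below assumes a hypothetical and very optimistic rate of one million probes per second and one probe per address; real internal scans are far slower.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def sweep_seconds(prefix_len, probes_per_second, bits=128):
    """Seconds needed to send one probe to every address in a subnet."""
    return 2 ** (bits - prefix_len) / probes_per_second


def sweep_years(prefix_len, probes_per_second, bits=128):
    """The same duration expressed in (365-day) years."""
    return sweep_seconds(prefix_len, probes_per_second, bits) / SECONDS_PER_YEAR


# A whole IPv4 /8 (16,777,216 addresses): about 16.8 seconds at this rate
print(sweep_seconds(8, 1_000_000, bits=32))
# A single IPv6 /64: roughly 585 thousand years at the same rate
print(round(sweep_years(64, 1_000_000)))  # → 584942
```

This is why IPv6 host discovery has to rely on other sources (neighbor tables, DHCPv6 logs, agents, DNS) rather than on sweeping address ranges.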

Active network scanning is an important tool, but not a solution for all problems. It can be useful for detecting systems that are not described anywhere (shadow IT). But I think it should be an additional process, not the main one.

Now I believe that we should actively scan only the hosts we already know about, and only after approval from the system owners.
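In practice, this turns the scan scope into a filter over the inventory rather than a list of subnets. A minimal sketch, assuming each known asset carries hypothetical approval flags (one for scanning at all, one for authenticated scanning) recorded after agreeing with the system owner; the `Asset` class and `build_scan_scope` function are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    ip: str
    owner: str
    scan_approved: bool = False         # hypothetical: owner agreed to active scanning
    credentials_approved: bool = False  # hypothetical: owner provided credentials


def build_scan_scope(assets):
    """Split known assets into authenticated, unauthenticated and excluded targets."""
    scope = {"authenticated": [], "unauthenticated": [], "excluded": []}
    for asset in assets:
        if not asset.scan_approved:
            scope["excluded"].append(asset.ip)
        elif asset.credentials_approved:
            scope["authenticated"].append(asset.ip)
        else:
            scope["unauthenticated"].append(asset.ip)
    return scope


assets = [
    Asset("10.0.0.1", "ops", scan_approved=True, credentials_approved=True),
    Asset("10.0.0.2", "dev", scan_approved=True),
    Asset("10.0.0.3", "hr"),
]
print(build_scan_scope(assets))
```

The "excluded" list is also a useful to-do list: these are the owners you still need to talk to.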

Integrations

And where should we get information about the hosts? It's great when an Asset Management system exists and is supported by IT. If there is no such system, we must collect information from various sources and somehow consolidate it.
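The core of such consolidation is merging records about the same host from different sources and remembering who reported what. A minimal sketch, assuming each source has already been exported to a list of dicts with a hypothetical `hostname` key and an optional `ip` key. Matching by lower-cased hostname alone is a deliberate simplification; real data often needs matching by IP, MAC address or FQDN vs. short name as well.

```python
from collections import defaultdict


def consolidate(sources):
    """Merge host records from several sources, keyed by lower-case hostname."""
    hosts = defaultdict(lambda: {"ips": set(), "sources": set()})
    for source_name, records in sources.items():
        for record in records:
            key = record["hostname"].strip().lower()
            hosts[key]["sources"].add(source_name)
            if record.get("ip"):
                hosts[key]["ips"].add(record["ip"])
    return dict(hosts)


sources = {
    "ldap": [{"hostname": "PC-001"}, {"hostname": "srv-db"}],
    "antivirus": [{"hostname": "pc-001", "ip": "10.0.0.5"}],
    "scanner": [{"hostname": "unknown-box", "ip": "10.0.0.99"}],
}
inventory = consolidate(sources)

# Hosts seen only by the scanner: candidates for shadow IT
print([h for h, v in inventory.items() if v["sources"] == {"scanner"}])
```

The same structure answers the opposite question too: hosts present in LDAP but missing from the antivirus source are gaps in endpoint protection coverage.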

Of course, it is possible to put such information into some database, for example MongoDB. But sometimes it is much better to use an enterprise-ready solution, such as Splunk. This is certainly much easier than writing your own application and having to think about visualization, authentication, etc.

I do not want to argue whether Splunk is a SIEM or not. For me, it is a convenient platform into which you can regularly load various types of data, process them and visualize them. Connectors for Splunk are really easy to write, and different employees can write them independently.

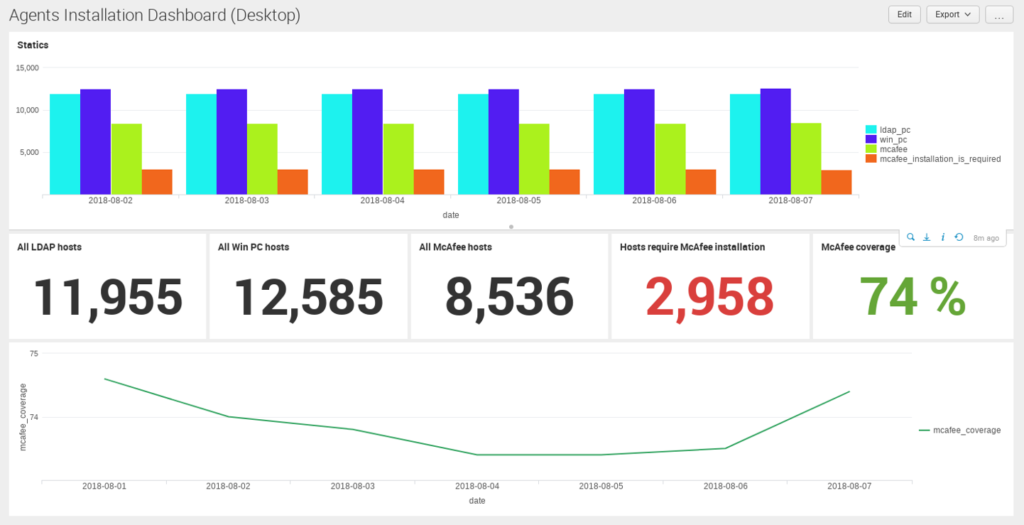

We can put information about the hosts there from LDAP and home-grown CMDBs, and gather information from endpoint protection agents: McAfee, Trend Micro, Kaspersky, FireEye, etc. We can also add information about active assets from Cisco ISE and the results of active scanning from Nessus. The result is very informative.

I have already written about how to do this in these posts:

- Export anything to Splunk with HTTP Event Collector

- How to correlate different events in Splunk and make dashboards

- Sending FireEye HX data to Splunk

- Sending tables from Atlassian Confluence to Splunk
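For reference, here is a minimal sketch of what one such connector call to the HTTP Event Collector can look like, using only the Python standard library. It assumes HEC is enabled on the Splunk side (it listens on port 8088 by default) and that you have a HEC token; the URL, token and `asset_inventory` sourcetype below are placeholders. The function only builds the request, so it can be inspected before anything is sent.

```python
import json
import time
import urllib.request


def build_hec_request(base_url, token, host_record, sourcetype="asset_inventory"):
    """Prepare (but do not send) a POST of one host record to Splunk HEC."""
    payload = {
        "time": time.time(),
        "host": host_record.get("hostname", "unknown"),
        "sourcetype": sourcetype,  # placeholder sourcetype name
        "event": host_record,      # the host record itself becomes the event body
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/services/collector/event",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Splunk {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_hec_request(
    "https://splunk.example.com:8088/",  # placeholder HEC address
    "00000000-token",                    # placeholder token
    {"hostname": "pc-001", "av": "installed"},
)
# To actually send it: urllib.request.urlopen(req)
```

If the HEC endpoint uses a self-signed certificate, `urlopen` will also need an SSL context that trusts it.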

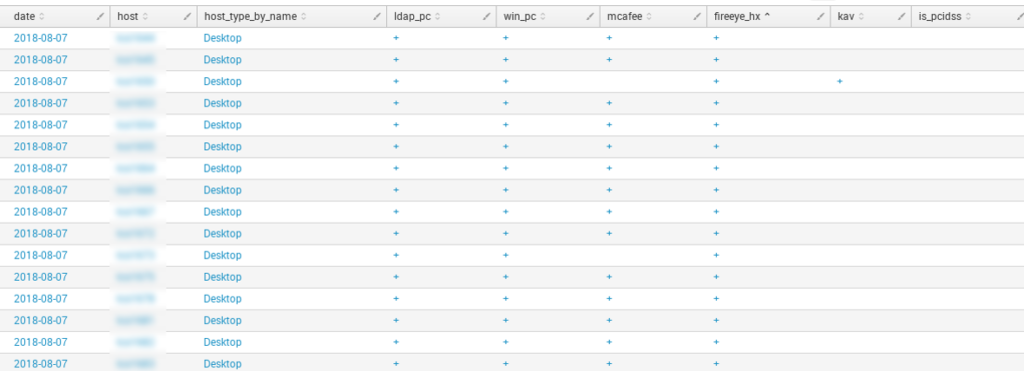

We can get a table of network hosts and split them into the groups we need.

And, of course, we can display them on dashboards. Here you can see McAfee agent coverage for Windows hosts (synthetic data).
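In Splunk, such a coverage panel is built with a search query. The underlying calculation is simple, and reproducing it outside Splunk can help to sanity-check a dashboard. A minimal Python sketch, assuming consolidated host records with hypothetical `os` and `agents` fields:

```python
def agent_coverage(hosts, os_name="windows", agent="mcafee"):
    """Percentage of hosts with the given OS that report the given agent."""
    relevant = [h for h in hosts if h.get("os", "").lower() == os_name]
    if not relevant:
        return 0.0  # no hosts of this OS: nothing to cover
    covered = sum(1 for h in relevant if agent in h.get("agents", ()))
    return 100.0 * covered / len(relevant)


hosts = [
    {"hostname": "pc-001", "os": "Windows", "agents": ["mcafee"]},
    {"hostname": "pc-002", "os": "Windows", "agents": []},
    {"hostname": "pc-003", "os": "windows", "agents": ["mcafee", "fireeye"]},
    {"hostname": "srv-01", "os": "Linux", "agents": []},
]
print(round(agent_coverage(hosts), 1))  # → 66.7
```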

What is great about Splunk is that these dashboards can be edited not only in graphical mode, but also as XML files. This means that dashboards can be generated, for example, with Python scripts.

I'm already doing this, and I can say that it saves a lot of time and effort, especially when we use similar search queries in several groups of charts, diagrams and tables. Otherwise, we would have to change each graphical element every time the main search query changes (for example, when we add a new data source).
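Here is a minimal sketch of this idea for classic Simple XML dashboards, built with the standard `xml.etree.ElementTree` module. The shared base query, the `assets` index and the panel definitions are hypothetical. Every panel gets the full query here, which is the simplest option; Splunk also supports base searches with post-processing, which avoid re-running the shared part, but that goes beyond this sketch. The point is that the main query lives in one place.

```python
import xml.etree.ElementTree as ET

# Hypothetical main query shared by all panels: change it here, regenerate the XML
BASE_QUERY = "index=assets sourcetype=asset_inventory | dedup host"


def build_dashboard(label, base_query, panels):
    """Generate Simple XML; panels is a list of (title, element, extra_spl) tuples."""
    dashboard = ET.Element("dashboard")
    ET.SubElement(dashboard, "label").text = label
    row = ET.SubElement(dashboard, "row")
    for title, element, extra_spl in panels:
        panel = ET.SubElement(row, "panel")
        ET.SubElement(panel, "title").text = title
        viz = ET.SubElement(panel, element)  # e.g. "chart" or "table"
        search = ET.SubElement(viz, "search")
        ET.SubElement(search, "query").text = f"{base_query} {extra_spl}"
    # ElementTree escapes characters like "<" that may appear in SPL
    return ET.tostring(dashboard, encoding="unicode")


xml_text = build_dashboard(
    "Asset inventory",
    BASE_QUERY,
    [
        ("Hosts by OS", "chart", "| stats count by os"),
        ("Hosts without agent", "table", "| where isnull(agent) | table host os"),
    ],
)
print(xml_text)
```

Adding a new data source then means editing `BASE_QUERY` once and regenerating the dashboard, instead of editing every panel by hand.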

Hi! My name is Alexander and I am a Vulnerability Management specialist. You can read more about me here. Currently, the best way to follow me is my Telegram channel @avleonovcom. I update it more often than this site. If you haven’t used Telegram yet, give it a try. It’s great. You can discuss my posts or ask questions at @avleonovchat.

And I invite all Russian speakers to one more Telegram channel, @avleonovrus; that is now where I post first.