Continuing the series of posts about Kenna (“Analyzing Vulnerability Scan data”, “Connectors and REST API”) and similar services. Is it actually safe to send your vulnerability data to an external cloud service for analysis? A leak of such information could cause great damage to your organization, right?

Once again, it’s a question of how much you trust the vendor. IMHO, in some cases it may make sense to hide the real hostnames and IP addresses of the target hosts in scan reports. The analysis vendor would then see that some critical vulnerability exists somewhere, but not where exactly.

To do this, each hostname or IP address should be replaced with a value of a similar type, and with the same value every time it appears. That way the algorithms of a Kenna-like service can still work with the masked reports. This means that we need to create a replacement dictionary.
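A minimal sketch of what "the same value each time" means in practice. The `AliasMap` class and its method names are hypothetical and not part of the original scripts. It uses the standard `ipaddress` module instead of `iptools`, and it hands out aliases starting at the first usable host of 192.168.0.0/16, which is an assumption made for this illustration.

```python
import ipaddress


class AliasMap:
    """Hypothetical helper: hands out one stable alias per real value."""

    def __init__(self, network="192.168.0.0/16", host_prefix="corporation-host"):
        # iter() so that next() works whatever hosts() returns
        self._ip_pool = iter(ipaddress.ip_network(network).hosts())
        self._host_prefix = host_prefix
        self._host_count = 0
        self.mapping = {}  # real value -> alias

    def ip_alias(self, ip):
        # Allocate a new alias only the first time this IP is seen.
        # next() raises StopIteration if the alias network is exhausted.
        if ip not in self.mapping:
            self.mapping[ip] = str(next(self._ip_pool))
        return self.mapping[ip]

    def host_alias(self, fqdn):
        # Same idea for hostnames: corporation-host1, corporation-host2, ...
        if fqdn not in self.mapping:
            self._host_count += 1
            self.mapping[fqdn] = f"{self._host_prefix}{self._host_count}"
        return self.mapping[fqdn]


aliases = AliasMap()
first = aliases.ip_alias("10.1.2.3")
again = aliases.ip_alias("10.1.2.3")  # same alias as the first call
```

Because the alias is looked up before it is allocated, a host that appears in ten reports gets the same masked value in all ten, so the external service can still correlate findings per host.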

Let’s say I have a directory with some scan results. I build a set of the file names and specify which characters can be used in an FQDN:

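    import os
    import re
    import json

    import iptools

    # Characters allowed in an FQDN (used as a regex character class)
    fqdn_symbols = r"[a-zA-Z0-9.\-_]*"

    # Names of all scan reports in the scans/ directory
    files = set()
    for file in os.listdir("scans/"):
        files.add(file)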

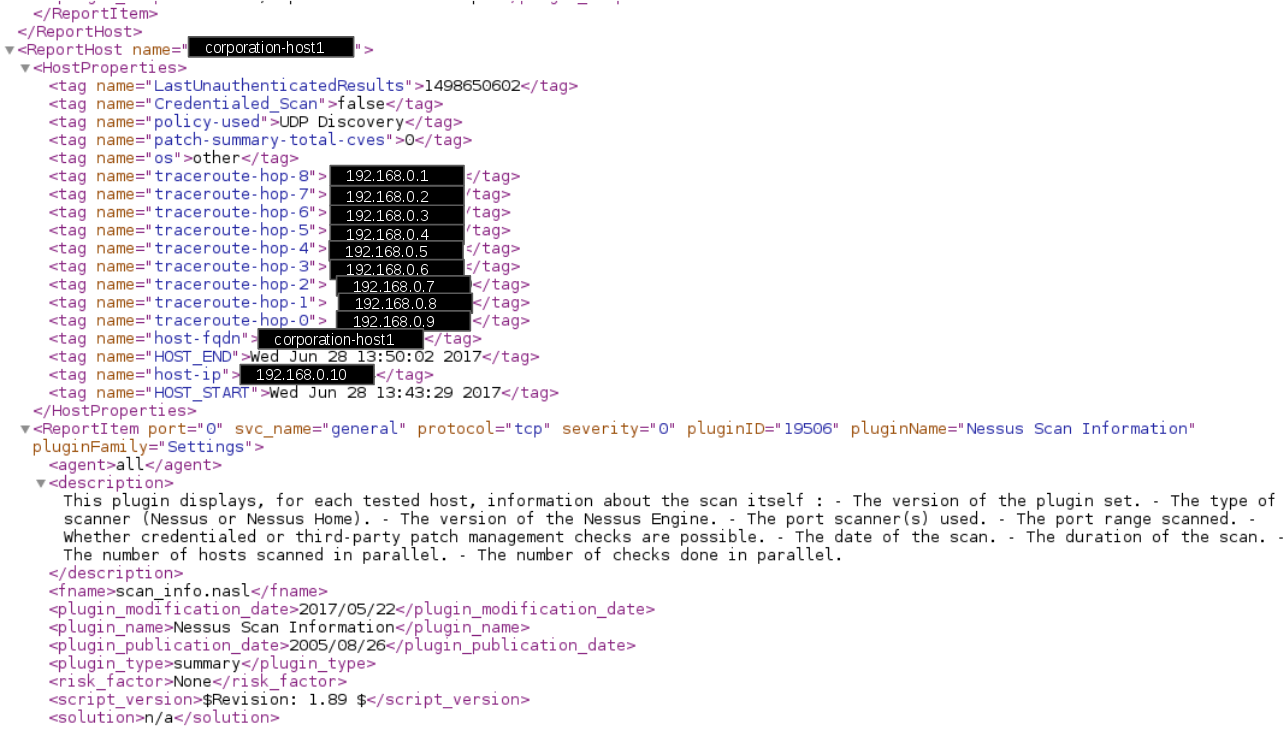

To build the replacement dictionary, I analyzed the Nessus reports line by line (processing XML as text is a sin, I know 😉). I looked for values that look like IP addresses and hostnames. Then I chose aliases for these values: I took IP addresses from 192.168.0.0/16 and made FQDN-like values of the form corporation-hostNUMBER. I also assumed that we use only .ru and .com domains.

    ips_to_mask = set()
    fqdns_to_mask = set()

    for file in files:
        print(file)
        with open("scans/" + file) as f:
            for line in f.read().split("\n"):
                # Collect .ru hostnames and cut them out of the line,
                # so they are not picked up again by the next patterns
                lines = re.findall("(" + fqdn_symbols + r"\.ru)", line)
                for line2 in lines:
                    fqdns_to_mask.add(line2)
                    line = line.replace(line2, "", 1)
                # Same for .com hostnames
                lines = re.findall("(" + fqdn_symbols + r"\.com)", line)
                for line2 in lines:
                    fqdns_to_mask.add(line2)
                    line = line.replace(line2, "", 1)
                # Whatever still looks like an IPv4 address
                ips = re.findall(r"(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})", line)
                for ip in ips:
                    ips_to_mask.add(ip)

    replace = dict()

    # Alias IP addresses: 192.168.0.2, 192.168.0.3, ...
    n = 1
    for ip in ips_to_mask:
        p = iptools.ipv4.ip2long('192.168.0.1') + n
        new_ip = iptools.ipv4.long2ip(p)
        replace[ip] = str(new_ip)
        n += 1

    # Alias hostnames: corporation-host1, corporation-host2, ...
    n = 1
    for line in fqdns_to_mask:
        replace[line] = "corporation-host" + str(n)
        n += 1

    # Save the dictionary so the masking can be repeated and reversed
    with open("replace.json", "w") as f:
        f.write(json.dumps(replace))

Then I made the replacements in the files:

    with open("replace.json", "r") as f:
        replace = json.loads(f.read())

    n = 0
    for file in files:
        print(file)
        content = ""
        with open("scans/" + file) as f:
            for line in f.read().split("\n"):
                # Replace .ru hostnames, keeping the top-level domain
                lines = re.findall("(" + fqdn_symbols + r"\.ru)", line)
                for line2 in lines:
                    line = line.replace(line2, replace[line2] + ".ru", 1)
                # Replace .com hostnames, keeping the top-level domain
                lines = re.findall("(" + fqdn_symbols + r"\.com)", line)
                for line2 in lines:
                    line = line.replace(line2, replace[line2] + ".com", 1)
                # Replace IP addresses. Note: an alias written here can be
                # replaced again if it equals another real IP on the same line.
                ips = re.findall(r"(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})", line)
                for ip in ips:
                    line = line.replace(ip, replace[ip], 1)
                content += line + "\n"
        # The output directory must already exist
        with open("test_masked_nessus_data/" + str(n) + ".xml", "w") as a:
            a.write(content)
        n += 1
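The replacement loop above rewrites each line several times in a row, so an alias that has just been written can be hit again by a later replacement (for example, when a report already contains real addresses from 192.168.0.0/16). A possible alternative, sketched here under the same assumptions as above (only .ru and .com domains, IPv4 only), is to do everything in one pass with `re.sub` and a callback. The names `TOKEN_RE` and `mask_line` are hypothetical.

```python
import re

# Assumed patterns, mirroring the ones used in the scripts above.
FQDN_RE = r"[a-zA-Z0-9.\-_]*\.(?:ru|com)"
IP_RE = r"\d{1,3}(?:\.\d{1,3}){3}"
TOKEN_RE = re.compile(f"(?P<fqdn>{FQDN_RE})|(?P<ip>{IP_RE})")


def mask_line(line, replace):
    """Mask every known hostname and IP in a line in a single pass."""

    def substitute(match):
        value = match.group(0)
        alias = replace.get(value)
        if alias is None:
            return value  # not in the dictionary: leave untouched
        if match.lastgroup == "fqdn":
            # Keep the original top-level domain (.ru or .com)
            return alias + value[value.rfind("."):]
        return alias

    # re.sub scans the original text only, so inserted aliases
    # are never matched and replaced a second time.
    return TOKEN_RE.sub(substitute, line)


replace = {
    "10.0.0.1": "192.168.0.5",
    "192.168.0.5": "192.168.0.9",
    "web01.example.com": "corporation-host1",
}
masked = mask_line("10.0.0.1 talks to 192.168.0.5 (web01.example.com)", replace)
```

Values that are missing from the dictionary are left as they are instead of raising a `KeyError`, which is a design choice for this sketch; failing loudly may be preferable if unmasked data must never leave the organization.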

If the external scan service finds something interesting on some masked IP address, you can tell which IP address it really was by using the replace.json dictionary. This is especially useful when you work with a service via an API and can make this reverse replacement automatically.
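A rough sketch of such a reverse replacement, assuming the dictionary format written to replace.json above (real value as key, alias as value) and the corporation-hostNUMBER naming scheme. The function names are hypothetical, and the text to unmask would be whatever the external service returns.

```python
import json
import re

# Masked hostnames look like corporation-host12.ru or corporation-host12.com;
# masked IPs are ordinary IPv4 addresses.
MASKED_RE = re.compile(
    r"(?P<host>corporation-host\d+)\.(?:ru|com)"
    r"|(?P<ip>\d{1,3}(?:\.\d{1,3}){3})"
)


def invert(forward):
    """Turn {real: alias} into {alias: real}."""
    return {alias: real for real, alias in forward.items()}


def load_reverse(path="replace.json"):
    """Load the saved dictionary and invert it."""
    with open(path) as f:
        return invert(json.load(f))


def unmask(text, reverse):
    """Put real hostnames and IPs back into text from the external service."""

    def restore(match):
        key = match.group("host") or match.group("ip")
        # Unknown values are returned unchanged
        return reverse.get(key, match.group(0))

    return MASKED_RE.sub(restore, text)


reverse = invert({"10.0.0.1": "192.168.0.2", "web01.example.ru": "corporation-host1"})
report = unmask("Critical on 192.168.0.2 (corporation-host1.ru)", reverse)
```

The real hostname in the dictionary already includes its domain, so the masked name together with its .ru or .com suffix is replaced as a whole.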

Hi! My name is Alexander and I am a Vulnerability Management specialist. You can read more about me here. Currently, the best way to follow me is my Telegram channel @avleonovcom. I update it more often than this site. If you haven’t used Telegram yet, give it a try. It’s great. You can discuss my posts or ask questions at @avleonovchat.

And I invite all Russian speakers to one more Telegram channel, @avleonovrus; I now post there first.