Divination with Vulnerability Database

Today I would like to write about a popular type of “security research” that really drives me crazy: an author takes a public vulnerability database and, by analyzing it, draws sweeping conclusions about software products or operating systems.

The latest research of this type was recently published on CNews, a popular Russian Internet portal about IT. It is titled “‘The brutal reality’ of the Information Security market: security software leads in the number of holes”.

The article is based on a Flexera/Secunia whitepaper. The main idea is that various security software products are insecure because of the number of vulnerability IDs related to them in the Flexera Vulnerability Database. In fact, the whole article is just a list of such “unsafe” products and vendors (IBM Security, AlienVault USM and OSSIM, Palo Alto, McAfee, Juniper, etc.) plus an expert commentary: cybercriminals may exploit vulnerabilities in security products to avoid having their IP addresses blocked; customers should focus first of all on the security of their own proprietary code, and only then include security products in the protection scheme.

What can I say about opuses of this kind?

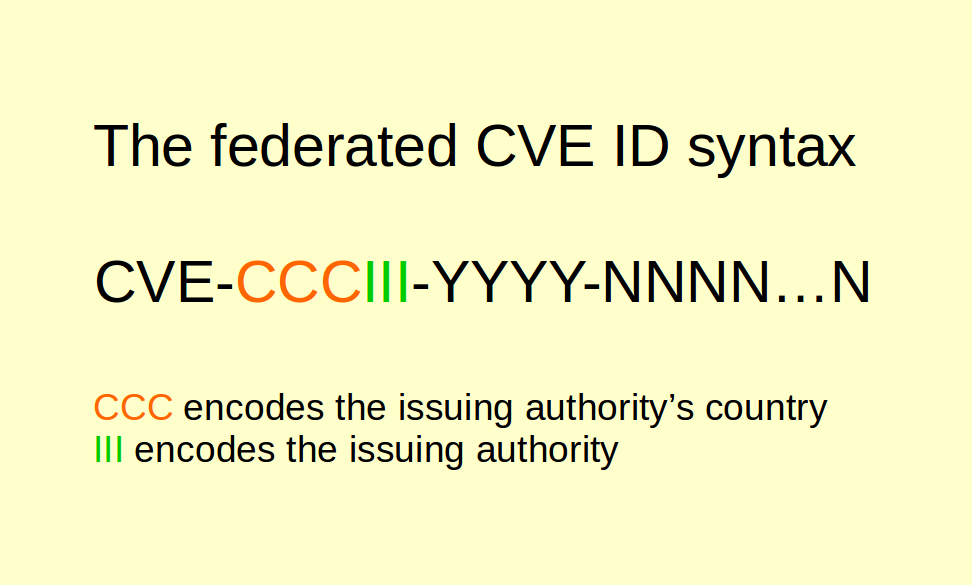

They provide “good” practices for software vendors:

- Hide information about vulnerabilities in your products

- Don’t release any security bulletins

- Don’t request CVE IDs from MITRE for known vulnerabilities in your products

And then analysts and journalists won’t write that your product is “a leader in the number of security holes”. Profit! 😉
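To make the point concrete, here is a minimal Python sketch of the kind of ranking such articles rely on. Everything in it is hypothetical: the vendor names, the IDs, and the `rank_by_published_ids` helper are invented for illustration and do not come from the Flexera database or the whitepaper. It only shows that counting published IDs measures disclosure, not the real number of holes.

```python
from collections import Counter

# Hypothetical data: (vendor, vulnerability ID) pairs as they might appear
# in a public vulnerability database. All names and IDs are invented.
PUBLISHED_IDS = [
    ("VendorA", "ID-0001"),  # VendorA publishes bulletins for every fix
    ("VendorA", "ID-0002"),
    ("VendorA", "ID-0003"),
    ("VendorB", "ID-0004"),  # VendorB discloses only occasionally
]

# VendorC never publishes bulletins or requests IDs, so it has no records.
VENDORS = ["VendorA", "VendorB", "VendorC"]


def rank_by_published_ids(records, vendors):
    """Rank vendors by the number of published IDs, highest first."""
    counts = Counter(vendor for vendor, _ in records)
    # Counter returns 0 for vendors that have no records at all.
    return sorted(
        ((vendor, counts[vendor]) for vendor in vendors),
        key=lambda item: item[1],
        reverse=True,
    )


ranking = rank_by_published_ids(PUBLISHED_IDS, VENDORS)
for vendor, count in ranking:
    print(f"{vendor}: {count} published IDs")
```

In this toy ranking the vendor that discloses the most ends up as the “leader in the number of holes”, while the vendor that discloses nothing looks perfectly safe, even though the data says nothing about how many vulnerabilities its product actually has.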
