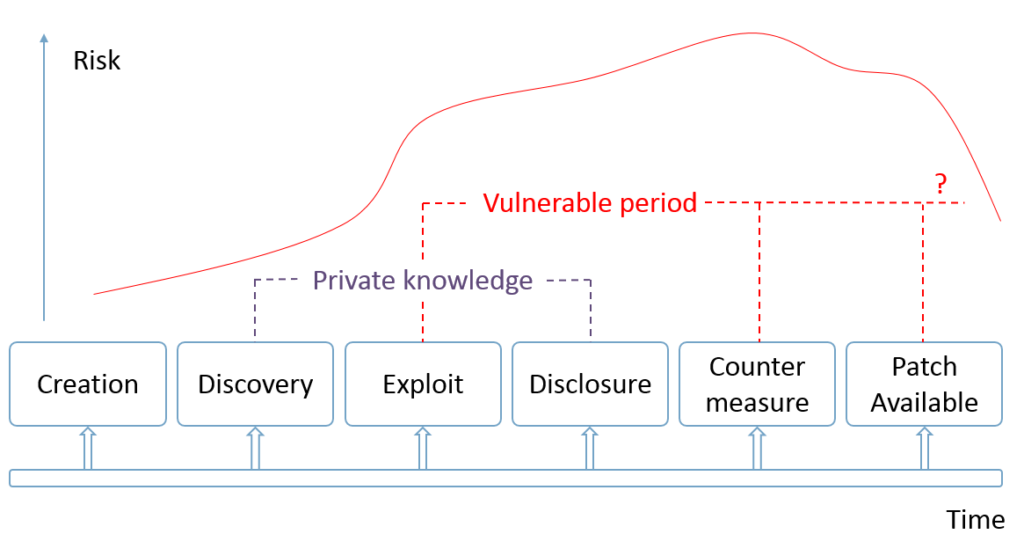

Vulnerability Life Cycle and Vulnerability Disclosures

The Vulnerability Life Cycle diagram shows the possible states of a vulnerability. In a previous post, I suggested treating vulnerabilities as bugs. Every known vulnerability, just like every bug, was introduced by some software developer at some point in time and fixed at some later point. What happens between these two events?

Right after a developer introduced the vulnerability into the code (creation), nobody knows about it. That is, of course, if it was done unintentionally. By the way, disguising a backdoor as an ordinary vulnerability is a smart way to plant one. 😉 But let’s say it WAS done unintentionally.

Time passed, and some researcher found this vulnerability (discovery) and described it somehow. What’s next? It depends on who that researcher was.

Responsible disclosure

Let’s say the researcher is an employee of the Software Vendor or a White Hat hacker/bug hunter. The vulnerability will be reported in detail to the Software Vendor and kept secret for some time (~3 months, or even longer) so that the vendor can fix it properly. Then the vendor will release a Security Bulletin with the patch for this vulnerability.

After that, information about the vulnerability becomes public. The vulnerability will get an official CVE number and a CVSS score, and everyone will know about it. Vulnerability management vendors will create checks to detect it automatically, making it easier to find in the infrastructure. All specific details, including PoC/exploit code, will be published some time after the patch release, to give customers time to update their systems.

This is the most comfortable scenario for the Software Vendor and its customers. It minimizes the risk that attackers will actively exploit the vulnerability before a patch is available.

But researchers have to wait a long time before they can share this information and demonstrate their expertise. This can be quite annoying, especially if they get no money for it, only an acknowledgment, literally “Thanks!”. There is also a risk that other researchers will find the same vulnerability and publish it first, taking all the credit.

Full disclosure

If the researcher is looking for more fame, or is just angry at the Software Vendor, he can publish all the details about the vulnerability, along with exploitation examples, as soon as he discovers it.

And not only individuals practice full disclosure. For example, Google’s Project Zero published Windows 10 vulnerabilities strictly once its 90-day deadline expired, ignoring all protests from Microsoft.

A vulnerability without a patch is called a 0day vulnerability. With full disclosure, there is a huge risk that attackers will actively exploit it. This means that everybody is in a hurry:

- Software Vendors will need to create and test the patch for the vulnerability as soon as possible

- Security teams in organizations will try to implement workarounds

- Cybercriminals will try to use this vulnerability in malware and attacks

But it’s not the worst case…

Dark side

What if the researcher finds the vulnerability and decides NOT to disclose it? Maybe he will use it in his own attacks, maybe he will sell it on the Darknet. In this case, the period of private knowledge and the vulnerable period can be very long: months or even years.

And it will become public only in these cases:

- It is found during malware analysis (like the 0days used in Stuxnet)

- It becomes part of a data leak (like the EternalBlue exploit leaked from the NSA).

Private exploits for 0day vulnerabilities can be very effective. The only good news is that they are also pretty rare and expensive, and can easily lose their value if the attack is detected. The target must be really important to risk losing such an exploit. When the vulnerability finally becomes public, the situation is the same as with full disclosure (see “WannaCry about Vulnerability Management”).
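To make the three scenarios concrete, here is a minimal Python sketch under a simplified, hypothetical model: each scenario is an ordered list of life-cycle events (the event names are illustrative, not from any standard), and a helper checks whether the details went public while no patch existed yet, i.e. whether defenders faced a public 0day.

```python
from enum import Enum, auto


class Event(Enum):
    """Hypothetical life-cycle events, simplified from the diagram."""
    CREATION = auto()              # a developer introduces the bug
    DISCOVERY = auto()             # a researcher finds it
    VENDOR_REPORT = auto()         # reported privately to the vendor
    PRIVATE_EXPLOITATION = auto()  # used or sold without disclosure
    PUBLIC = auto()                # details become publicly known
    PATCH = auto()                 # the vendor releases a fix


# Simplified event orderings for the three scenarios described above.
SCENARIOS = {
    "responsible": [Event.CREATION, Event.DISCOVERY, Event.VENDOR_REPORT,
                    Event.PATCH, Event.PUBLIC],
    "full": [Event.CREATION, Event.DISCOVERY, Event.PUBLIC, Event.PATCH],
    "dark_side": [Event.CREATION, Event.DISCOVERY, Event.PRIVATE_EXPLOITATION,
                  Event.PUBLIC, Event.PATCH],
}


def public_before_patch(events: list) -> bool:
    """Return True if the details became public while no patch existed (a public 0day)."""
    return events.index(Event.PUBLIC) < events.index(Event.PATCH)


for name, events in SCENARIOS.items():
    print(name, public_before_patch(events))
# responsible False
# full True
# dark_side True
```

In the dark side scenario the vulnerability is already a 0day during private exploitation; the helper only flags the moment it becomes public, which is when the situation turns into the same race as full disclosure.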

In real life

In real life, all these cases happen simultaneously. Different groups of researchers analyze software with different goals and results, and what we see in public is just the tip of the iceberg.

Risk?

Now, a little about the risk shown on the diagram. It is mostly common sense. A vulnerability that any script kiddie can exploit is much more dangerous than one that nobody knows how to exploit. And a vulnerability that can be fixed with a patch or workaround is less dangerous than one that can be fixed only by removing the software or disabling the service. The risk decreases only once a patch or workaround exists.
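The two rules of thumb above can be sketched in Python as ordinal scales. This is a hypothetical illustration, not a real scoring system like CVSS: the level names and the way the two scales are combined are assumptions, and only the ordering within each scale comes from the text.

```python
from enum import IntEnum


class Exploitability(IntEnum):
    """Hypothetical ordinal scale: how easy is the vulnerability to exploit?"""
    UNKNOWN = 0           # nobody knows how to exploit it
    PROOF_OF_CONCEPT = 1  # a PoC exists but needs skill to weaponize
    SCRIPT_KIDDIE = 2     # a ready-to-use exploit anyone can run


class Remediation(IntEnum):
    """Hypothetical ordinal scale: what does it take to fix it?"""
    PATCH_OR_WORKAROUND = 0  # fixable while keeping the software
    REMOVAL_ONLY = 1         # only removing the software or disabling the service helps


def relative_risk(exploitability: Exploitability, remediation: Remediation) -> int:
    """Rough relative score, higher means riskier.

    Hypothetical weighting: a plain sum. The text fixes only the ordering
    within each scale, not how the two scales trade off against each other.
    """
    return int(exploitability) + int(remediation)


easy = relative_risk(Exploitability.SCRIPT_KIDDIE, Remediation.REMOVAL_ONLY)
hard = relative_risk(Exploitability.UNKNOWN, Remediation.REMOVAL_ONLY)
patched = relative_risk(Exploitability.SCRIPT_KIDDIE, Remediation.PATCH_OR_WORKAROUND)
print(easy > hard, patched < easy)  # True True
```

Any function that grows with both scales would preserve the two orderings equally well; the sum is chosen only because it is the simplest.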

It is worth mentioning that the risk here has a global meaning: it is the risk to the global IT infrastructure. It works like the risk of a disease, which decreases for the whole human population once there is a vaccine and proper medicine. But for those individuals who avoid vaccination or treatment (read: don’t audit and patch their systems), the risk doesn’t decrease at all.

Hi! My name is Alexander and I am a Vulnerability Management specialist. You can read more about me here. Currently, the best way to follow me is my Telegram channel @avleonovcom. I update it more often than this site. If you haven’t used Telegram yet, give it a try. It’s great. You can discuss my posts or ask questions at @avleonovchat.

And I invite all Russian speakers to one more Telegram channel, @avleonovrus; that is where I now post first.


>Software Vendors will need to create and test the patch for the vulnerability as soon as possible

Totally agree with this. I am concerned that the need for speed may decrease quality. An example of that is Spectre (https://en.wikipedia.org/wiki/Spectre_(security_vulnerability))

>A vulnerability that any script kiddie can exploit is much more dangerous than one that nobody knows how to exploit.

You hit the nail on the head with this one. From what I have seen, the quality of exploits varies widely. Exploit-DB is often cited as an example of this. The exploits’ capabilities range from a proof of concept that merely shows the vulnerability exists to 1-click shell access.

It’s like comparing a first down in American football to a touchdown. The former is progress, but it’s not a win by itself.

Hi Parag! Totally agree with you. We need much better verification and classification of exploits. Currently, prioritization is often based on the belief that the code we see on exploit-db actually works and can be used by an attacker. However, verifying such code seems hard to automate. At the very least, it should be presented in some standard form, like a Metasploit module.
