Vulnerability Intelligence based on media hype. Does it work? Grafana LFI and Log4j “Log4Shell” RCE

Hello everyone! In this episode, I want to talk about vulnerabilities, news, and hype. The easiest way to get timely information about the most important vulnerabilities is to simply read the news regularly, right? Well, I will try to reflect on this using two examples from last week.

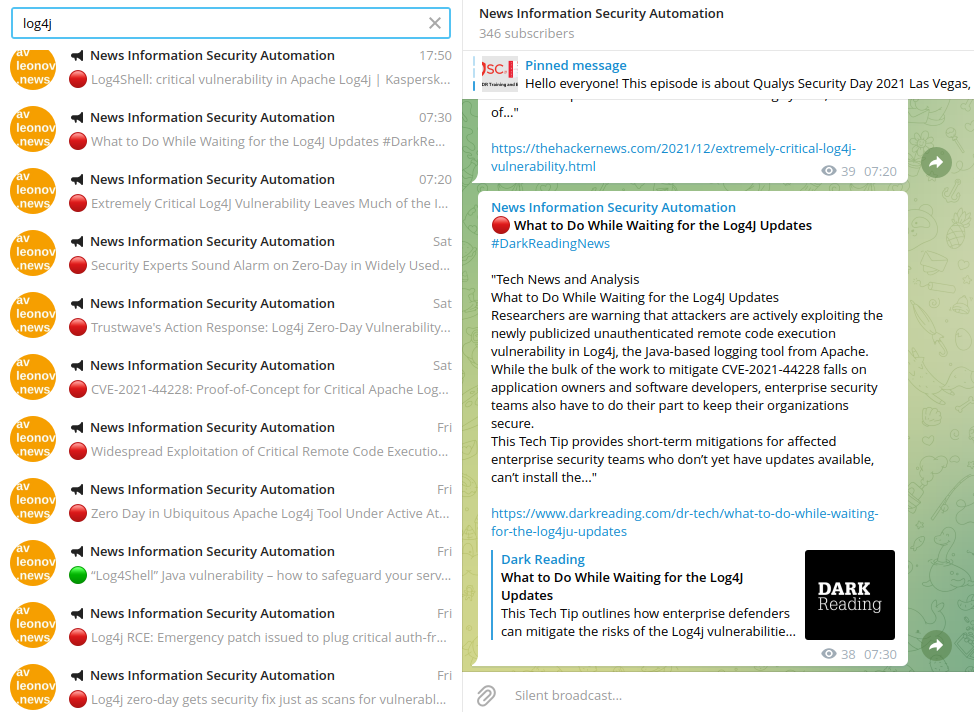

I have a security news Telegram channel, https://t.me/avleonovnews, that is automatically updated by a script that pulls many RSS feeds. The script even highlights news related to vulnerabilities, exploits, and attacks.
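The post does not describe how the script works internally, so this is only a minimal sketch, assuming plain RSS 2.0 feeds and a hypothetical keyword rule (`HIGHLIGHT_RE` and all function names are my own, not the actual script). It parses the `<item>` entries with the standard library and marks the ones whose titles look vulnerability-related.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical highlighting rule: whole-word matches so that "rce" does not hit "source".
HIGHLIGHT_RE = re.compile(
    r"\b(?:vulnerabilit(?:y|ies)|exploits?|attacks?|rce|cve-\d{4}-\d{4,})\b",
    re.IGNORECASE,
)


def parse_rss_items(rss_xml: str) -> list[dict]:
    """Return title/link pairs for every <item> in an RSS 2.0 document."""
    root = ET.fromstring(rss_xml)
    return [
        {
            "title": (item.findtext("title") or "").strip(),
            "link": (item.findtext("link") or "").strip(),
        }
        for item in root.iter("item")
    ]


def is_highlighted(title: str) -> bool:
    """True if the title mentions vulnerabilities, exploits, attacks or a CVE ID."""
    return HIGHLIGHT_RE.search(title) is not None


def format_message(item: dict) -> str:
    """Build the channel message, prefixing highlighted items with a marker."""
    prefix = "[!] " if is_highlighted(item["title"]) else ""
    return f"{prefix}{item['title']} {item['link']}"


if __name__ == "__main__":
    sample = """<rss version="2.0"><channel>
      <item><title>Grafana fixes CVE-2021-43798</title><link>https://example.com/a</link></item>
      <item><title>New dashboard features</title><link>https://example.com/b</link></item>
    </channel></rss>"""
    for entry in parse_rss_items(sample):
        print(format_message(entry))
```

Word boundaries matter here: a naive substring check for "rce" would flag every title containing "source" or "force".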

Last Tuesday, 07.12, a very interesting vulnerability in Grafana was disclosed.

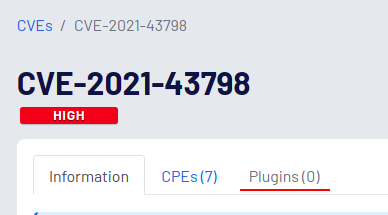

Grafana Local File Inclusion / Directory Traversal / Arbitrary File Read (CVE-2021-43798)

Grafana is an open source, multi-platform analytics and visualization web application. It provides charts, graphs, and alerts when connected to supported data sources. It is very popular and is used by companies almost everywhere for a variety of needs. I use it too: my video “How to list, create, update and delete Grafana dashboards via API” is the most viewed one on my YouTube channel. Really, this is a thing that everyone needs.

CVE-2021-43798 makes it very easy to send a request and get the contents of files on the host. Without any authentication!

$ curl --path-as-is http://GRAFANA_HOST:3000/public/plugins/alertlist/../../../../../../../../../../etc/passwd

Besides /etc/passwd, the target can be /etc/hosts or /var/lib/grafana/grafana.db. Depending on the host configuration, there are many ways to use this in attacks. Such vulnerabilities clearly need to be fixed urgently, and if you do not have a comprehensive IT asset inventory or Vulnerability Management system, that can be very problematic.
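Why does this request work? `--path-as-is` tells curl not to squash the `/../` sequences itself, so they reach the server intact. Below is a simplified Python model of this bug class, not Grafana's actual Go code: if the server joins the plugin directory with the unchecked rest of the URL path and only then normalizes it, the `..` segments climb out of the plugin directory. `PLUGIN_DIR` is a hypothetical install path.

```python
import posixpath

# Hypothetical install path of a built-in panel plugin; real paths vary by package.
PLUGIN_DIR = "/usr/share/grafana/public/app/plugins/panel/alertlist"


def resolve_naive(base_dir: str, requested: str) -> str:
    """Vulnerable pattern: join first, normalize later, never check the result."""
    return posixpath.normpath(posixpath.join(base_dir, requested))


def resolve_safe(base_dir: str, requested: str) -> str:
    """Anchor the requested path at "/" before normalizing, so ".." cannot climb above base_dir."""
    cleaned = posixpath.normpath("/" + requested).lstrip("/")
    return posixpath.join(base_dir, cleaned)


if __name__ == "__main__":
    # The part of the URL after /public/plugins/alertlist/ in the curl request
    requested = "../" * 10 + "etc/passwd"
    print(resolve_naive(PLUGIN_DIR, requested))  # /etc/passwd
    print(resolve_safe(PLUGIN_DIR, requested))   # stays inside PLUGIN_DIR
```

Anchoring at `/` is just one option; checking that the final path still starts with the base directory is another common approach.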

Just imagine: I tell my colleagues about this critical vulnerability and how great my automated security news channel is for tracking such things, so there should be plenty of Grafana news in it. Then I open the channel and there is nothing at all.

It turned out that very few sites covered it, and my channel did not process the RSS feeds of the sites that did. I have added the sites from the References section of the Qualys ThreatPROTECT post, so hopefully there will be no such problems next time.

By the way, I was unable to get the RSS feed for the Qualys ThreatPROTECT blog. The link in the page source, `<link rel="alternate" type="application/rss+xml" title="Qualys ThreatPROTECT » Feed" href="https://threatprotect.qualys.com/feed/" />`, leads to the landing page. If anyone from Qualys reads this, please fix it.
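Feed autodiscovery works by reading `<link rel="alternate">` tags like the one above from the page HTML. Here is a minimal standard-library sketch, assuming the page is already downloaded: collect the advertised RSS/Atom URLs, then check that what such a URL returns is actually a feed rather than an HTML landing page. The function and class names are illustrative.

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser
from urllib.parse import urljoin

FEED_TYPES = {"application/rss+xml", "application/atom+xml"}
ATOM_FEED_TAG = "{http://www.w3.org/2005/Atom}feed"


class FeedLinkParser(HTMLParser):
    """Collects hrefs of <link rel="alternate"> tags that advertise RSS/Atom feeds."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.feeds: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <link ... /> also ends up here via handle_startendtag.
        if tag != "link":
            return
        a = {name: (value or "") for name, value in attrs}
        rels = a.get("rel", "").lower().split()
        if "alternate" in rels and a.get("type", "").lower() in FEED_TYPES and a.get("href"):
            self.feeds.append(urljoin(self.base_url, a["href"]))


def discover_feeds(html: str, base_url: str) -> list[str]:
    """Return absolute feed URLs advertised in the page HTML."""
    parser = FeedLinkParser(base_url)
    parser.feed(html)
    parser.close()
    return parser.feeds


def looks_like_feed(body: str) -> bool:
    """True if the body parses as XML with an <rss> or Atom <feed> root element."""
    try:
        root = ET.fromstring(body)
    except ET.ParseError:
        return False
    return root.tag == "rss" or root.tag == ATOM_FEED_TAG
```

A landing page served at the advertised feed URL fails `looks_like_feed`, which is exactly the symptom described above.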

I also want to note that there is still no detection for CVE-2021-43798 in Nessus, although such a check is trivial to implement and more than 6 days have passed. Unfortunately, you cannot rely entirely on vulnerability scanners.
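To show how trivial the check is, here is a minimal sketch of an unauthenticated probe for Grafana instances you are authorized to test. It assumes a Linux host (so `/etc/passwd` exists) and treats `root:x:0:0:` in a 200 response as the signal. `urllib` sends the path without collapsing `..` segments, which is the equivalent of curl's `--path-as-is`. `build_probe_url`, `looks_vulnerable` and `check_host` are hypothetical names; a real scanner check would also try other plugin IDs and paths.

```python
import urllib.request

# Heuristic marker: the first line of /etc/passwd on most Linux systems.
PASSWD_MARKER = "root:x:0:0:"


def build_probe_url(base_url: str, plugin_id: str = "alertlist", depth: int = 10) -> str:
    """Build a traversal URL like the one in the curl example."""
    return f"{base_url.rstrip('/')}/public/plugins/{plugin_id}/" + "../" * depth + "etc/passwd"


def looks_vulnerable(status: int, body: str) -> bool:
    """Treat a 200 response containing the passwd marker as a positive finding."""
    return status == 200 and PASSWD_MARKER in body


def check_host(base_url: str, timeout: float = 5.0) -> bool:
    """Probe one Grafana instance that you are authorized to test."""
    try:
        with urllib.request.urlopen(build_probe_url(base_url), timeout=timeout) as resp:
            body = resp.read(4096).decode("utf-8", errors="replace")
            return looks_vulnerable(resp.status, body)
    except OSError:
        # URLError/HTTPError (e.g. 404), timeouts and connection errors all subclass OSError
        return False
```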

But let’s look at an example where everything worked out as it should.

A critical vulnerability in Log4j was disclosed on Friday, 10.12.

Log4j Remote Code Execution (CVE-2021-44228)

Log4j is a logging framework written in Java. The zero-day remote code execution vulnerability in Log4j 2 has been named Log4Shell (CVE-2021-44228).

I will not go into the technical details that everyone is already writing about; there were at least 11 mentions in avleonovnews. For example, Tenable released a good analysis.

This is an easily exploitable RCE vulnerability in a library, and it is a problem from two sides at once: application security and infrastructure security.
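On the log side, the attack string is a lookup such as `${jndi:ldap://...}` placed in any field that gets logged (a header, a form value), which vulnerable Log4j 2 versions resolve. Here is a minimal detection sketch for log lines, assuming only a few well-known obfuscations (`${lower:x}`, `${upper:x}`, `${::-x}`). Real payloads vary far more, so treat it as a triage aid, not a complete detector. The function names are hypothetical.

```python
import re

# Innermost ${...} expression: no nested "${" or "}" inside.
_INNERMOST = re.compile(r"\$\{([^${}]*)\}")
_JNDI = re.compile(r"\$\{jndi:", re.IGNORECASE)


def _resolve(match: re.Match) -> str:
    """Collapse a few known obfuscation lookups; leave everything else untouched."""
    body = match.group(1)
    lowered = body.lower()
    if lowered.startswith("jndi:"):
        return match.group(0)  # keep the payload itself visible
    if lowered.startswith("lower:"):
        return body[len("lower:"):].lower()
    if lowered.startswith("upper:"):
        return body[len("upper:"):].upper()
    if ":-" in body:
        # Assume the lookup is unresolved, so ${...:-x} yields its default x
        return body.split(":-", 1)[1]
    return match.group(0)


def deobfuscate(line: str, max_rounds: int = 10) -> str:
    """Repeatedly resolve innermost lookups until the line stops changing."""
    for _ in range(max_rounds):
        new = _INNERMOST.sub(_resolve, line)
        if new == line:
            break
        line = new
    return line


def is_suspicious(line: str) -> bool:
    """True if the (de-obfuscated) log line contains a ${jndi: lookup."""
    return _JNDI.search(deobfuscate(line)) is not None
```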

Application security teams are dealing with it right now. They need to find out which applications use a vulnerable log4j and either reconfigure them or upgrade to a new version, which can be extremely difficult for legacy projects. But first, the vulnerable applications have to be found, and that can also be extremely difficult if the organization does not have sufficient control over its development and deployment processes.
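As a first step in finding vulnerable applications, here is a minimal sketch that walks a directory tree and inspects `.jar`/`.war`/`.ear` archives. It assumes 2.15.0 as the first release that addresses CVE-2021-44228; later Apache advisories recommend newer versions, and backported releases such as 2.12.2 and 2.3.1 will show up as false positives here. It flags `log4j-core` jars by file name, nested `log4j-core` jars inside other archives, and fat or shaded jars containing `JndiLookup.class`, which need a manual look. All helper names are hypothetical.

```python
import os
import re
import zipfile

# Assumption: 2.15.0 is the first release addressing CVE-2021-44228.
# Backported fixes (e.g. 2.12.2, 2.3.1) are NOT handled and will show up as false positives.
FIRST_FIXED = (2, 15, 0)
JNDI_CLASS = "org/apache/logging/log4j/core/lookup/JndiLookup.class"
# Pre-release suffixes such as "-beta9" are ignored by this pattern.
CORE_JAR_RE = re.compile(r"log4j-core-(\d+)\.(\d+)(?:\.(\d+))?[^/]*\.jar$")
ARCHIVE_EXTS = (".jar", ".war", ".ear")


def parse_core_version(name: str):
    """Extract (major, minor, patch) from a log4j-core jar file name, or None."""
    m = CORE_JAR_RE.search(name)
    if not m:
        return None
    return (int(m.group(1)), int(m.group(2)), int(m.group(3) or 0))


def is_affected_version(version) -> bool:
    return version[0] == 2 and version < FIRST_FIXED


def findings_for_archive(path: str, members: list[str]) -> list[str]:
    """Turn one archive's file name and member list into human-readable findings."""
    findings = []
    own = parse_core_version(os.path.basename(path))
    if own and is_affected_version(own):
        findings.append(f"{path}: log4j-core {'.'.join(map(str, own))}")
    if own is None and JNDI_CLASS in members:
        # Fat/shaded jar with Log4j classes inside: the version is unknown, review manually
        findings.append(f"{path}: contains JndiLookup.class, review")
    for member in members:
        nested = parse_core_version(member)
        if nested and is_affected_version(nested):
            findings.append(f"{path}!{member}: bundled log4j-core {'.'.join(map(str, nested))}")
    return findings


def scan_tree(root: str) -> list[str]:
    """Walk a directory tree and inspect every Java archive found."""
    results = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            if not filename.lower().endswith(ARCHIVE_EXTS):
                continue
            path = os.path.join(dirpath, filename)
            try:
                with zipfile.ZipFile(path) as archive:
                    members = archive.namelist()
            except (zipfile.BadZipFile, OSError):
                continue  # unreadable or not really a zip archive
            results.extend(findings_for_archive(path, members))
    return results
```

File-name checks are cheap but miss renamed jars, which is why the `JndiLookup.class` check is there as a second signal.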

The effect on infrastructure security will be more delayed. Log4j is used in a wide variety of products. The most obvious ones are Apache Druid, Apache Flink, Apache Solr, Apache Spark, Apache Struts2, and Apache Tomcat. We will learn about others as new vendor bulletins are released, and each of these new vulnerabilities will be critical and will need to be fixed as soon as possible. Many surprises await us.

In conclusion

The Vulnerability Intelligence process is, of course, essential, simply because vulnerability scanners cannot detect every vulnerability in a timely manner. However, everything depends on the sources you use. Some vulnerabilities will very likely slip under the radar simply because they are not interesting to a mass audience. Therefore, monitoring vulnerability-related news (for example, with avleonovnews) or using a specialized feed such as Qualys Threat Protection Feed is just the beginning. It helps you notice the really massive, trending issues.

The next step is to add context: what is actually used in your particular organization. This means, again, that you need an inventory. You should know what hosts you have, what software is installed on them, and who is responsible for them. And of course, all this should be updated automatically.
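A minimal sketch of how such an inventory can be queried, assuming a simple in-memory model (`Host`, `remediation_tasks` and the sample data are hypothetical): each host record carries its owner and installed software, so a list of vulnerable versions of a product turns directly into tasks grouped by responsible team.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Host:
    """One inventory record: a host, its owner, and installed software."""
    name: str
    owner: str
    software: dict[str, str] = field(default_factory=dict)  # product -> version


def remediation_tasks(inventory: list[Host], product: str,
                      vulnerable_versions: set[str]) -> dict[str, list[str]]:
    """Group hosts running a vulnerable version of `product` by responsible owner."""
    tasks: dict[str, list[str]] = defaultdict(list)
    for host in inventory:
        version = host.software.get(product)
        if version in vulnerable_versions:
            tasks[host.owner].append(f"{host.name}: update {product} {version}")
    return dict(tasks)


if __name__ == "__main__":
    inventory = [
        Host("mon-01", "team-a", {"grafana": "8.3.0"}),
        Host("mon-02", "team-b", {"grafana": "8.3.1"}),
        Host("web-01", "team-a", {"nginx": "1.20.1"}),
    ]
    # Illustrative input: the vulnerable versions come from the analyst or a vendor bulletin
    print(remediation_tasks(inventory, "grafana", {"8.3.0"}))
```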

Then, looking at this list of entities, you can decide exactly how you will receive information about their vulnerabilities and see what is covered by the Vulnerability Intelligence process and what is not. And, if necessary, you can quickly put together a list of specific remediation tasks instead of frantically searching for vulnerable entities when a fix is required ASAP.
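The coverage check can be sketched as a comparison between what the inventory contains and which products have a configured intelligence source. Everything here is a hypothetical model: a `{host: set of products}` mapping and a `{product: list of sources}` mapping. Uncovered products that run on many hosts sort to the top, so the biggest blind spots come first.

```python
from collections import Counter


def coverage_report(inventory: dict[str, set[str]],
                    intel_sources: dict[str, list[str]]) -> list[tuple[str, int, bool]]:
    """For each product in the inventory: (product, host count, covered by any source).

    Uncovered products come first, then products running on more hosts, then by name.
    """
    counts = Counter(p for products in inventory.values() for p in products)
    rows = [(p, n, bool(intel_sources.get(p))) for p, n in counts.items()]
    rows.sort(key=lambda r: (r[2], -r[1], r[0]))
    return rows


if __name__ == "__main__":
    inventory = {
        "mon-01": {"grafana", "nginx"},
        "mon-02": {"grafana"},
        "app-01": {"log4j-core", "nginx"},
    }
    sources = {"nginx": ["vendor advisories"], "grafana": []}  # illustrative only
    for product, hosts, covered in coverage_report(inventory, sources):
        print(f"{product:12} hosts={hosts} covered={covered}")
```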

Hi! My name is Alexander and I am a Vulnerability Management specialist. You can read more about me here. Currently, the best way to follow me is my Telegram channel @avleonovcom. I update it more often than this site. If you haven’t used Telegram yet, give it a try. It’s great. You can discuss my posts or ask questions at @avleonovchat.

And I invite all Russian speakers to my other Telegram channel, @avleonovrus; that is where I now post first.
