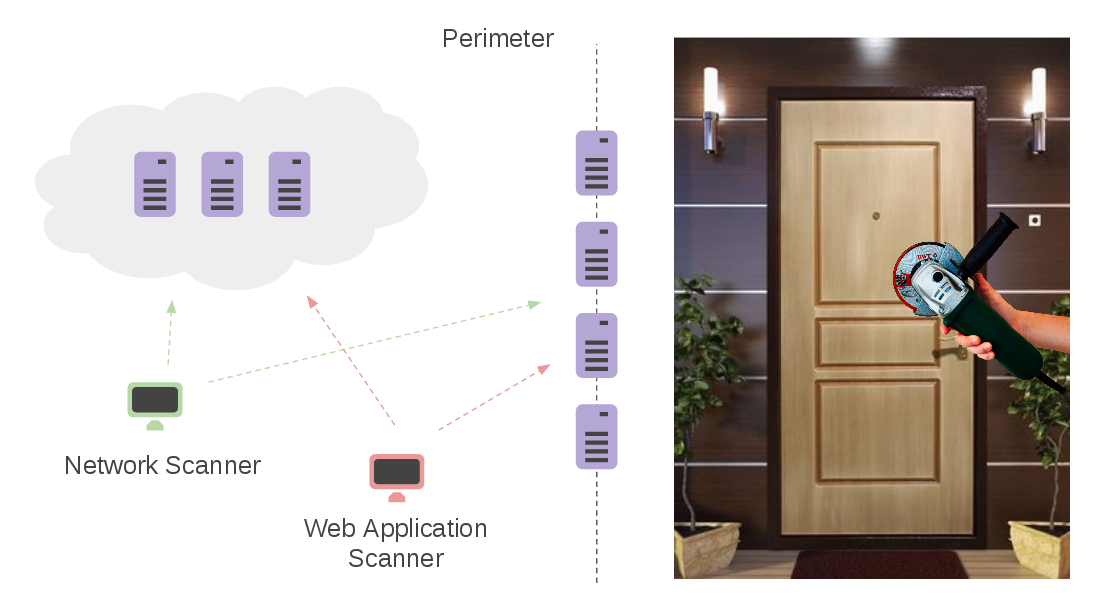

Vulnerability Management for Network Perimeter

The Network Perimeter is like a door to your organization. It is accessible to everyone, and exploiting its vulnerabilities requires no human interaction, unlike, for example, phishing attacks. A potential attacker can automate most of the search for an easy target. It’s important not to be such a target. 😉

What does it mean to control the network perimeter? In practice, this process consists of two main parts:

- Assessing Internet-facing network hosts with a Network Scanner (Nessus, OpenVAS, Qualys, MaxPatrol, F-Secure Radar, etc.)

- Assessing application servers on these hosts, e.g. Web Servers, with specialized tools such as Web Application Scanners (Acunetix, Burp Suite, Qualys WAS, Tenable.io WAS, High-Tech Bridge ImmuniWeb, etc.)

Active scanning is a good method of perimeter assessment. The set of assets changes relatively slowly compared with the Office Network. Perimeter hosts usually stay active all the time, including the time when you are scanning them. 😉

Most of the dangerous vulnerabilities can be detected without authentication: problems with encryption (OpenSSL Heartbleed, POODLE, etc.), and RCE and DoS in web servers and frameworks (Apache Struts and the Equifax case).

The best results can be achieved with scanners deployed outside of your network. Thus, you will see your Network Perimeter the same way a potential attacker sees it. But certainly, you will be in a better position:

- You can ask your IT administrators to add your network and WAS scanners to a whitelist, so they will not be banned.

- You can correlate the results of the remote scanner with the (authenticated?) scan results produced by a scanner deployed inside your organization’s network, and thus filter out false positives.
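The correlation idea above can be sketched as a simple set comparison. This is only an illustration: the `Finding` tuple, the `correlate` function, and the sample findings are hypothetical, and it assumes that both scanners identify a host by the same address, which will not hold if the internal scanner sees private IPs behind NAT.

```python
from __future__ import annotations

from typing import NamedTuple


class Finding(NamedTuple):
    host: str    # IP address or hostname (assumed identical in both scans)
    port: int
    plugin: str  # scanner plugin or check name


def correlate(external: set[Finding], internal: set[Finding]) -> dict[str, set[Finding]]:
    """Split findings by which of the two scans reported them."""
    return {
        # Reported by both scanners.
        "confirmed": external & internal,
        # Seen only from outside: candidates for false-positive review.
        "needs_review": external - internal,
        # Seen only from inside: not reachable, or filtered, from the Internet.
        "internal_only": internal - external,
    }


# Made-up sample data for illustration only.
external = {
    Finding("12.12.234.15", 443, "OpenSSL Heartbleed"),
    Finding("12.12.234.15", 80, "Apache Struts RCE"),
}
internal = {
    Finding("12.12.234.15", 443, "OpenSSL Heartbleed"),
    Finding("12.12.234.15", 22, "SSH Weak Algorithms"),
}

result = correlate(external, internal)
for bucket, findings in result.items():
    print(bucket, sorted(findings))
```

A finding in `needs_review` is not automatically a false positive; it only marks where a manual check is worth the time.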

What about the targets for scanning? How should you get them?

Getting target lists for Network Scanning

Well, it depends heavily on your particular organization. IMHO, your scan targets should include all the public IP addresses and all the domains related to your organization.

Some potential sources:

- Ranges of IP addresses related to IANA ASN of an organization

- Corporate Wiki, e.g. Atlassian Confluence

- Corporate Task Tracker, e.g. Atlassian JIRA

- IT monitoring, e.g. Nagios

- WAF/AntiDDoS systems, e.g. Akamai

- SIEM, e.g. Splunk

- CMDB
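Once addresses are exported from several of these sources, they have to be merged into one target list. A minimal sketch using Python’s standard `ipaddress` module is shown here; the function name `merge_ip_targets` and the sample values are hypothetical, and it assumes IPv4 entries only (mixing IPv4 and IPv6 in one call would fail).

```python
import ipaddress


def merge_ip_targets(*sources):
    """Merge IPv4 addresses and CIDR ranges from several sources into minimal networks."""
    networks = []
    for source in sources:
        for entry in source:
            # strict=False also accepts a host address written with a prefix length.
            networks.append(ipaddress.ip_network(entry.strip(), strict=False))
    # collapse_addresses merges adjacent ranges and drops entries covered by a larger range.
    return [str(net) for net in ipaddress.collapse_addresses(networks)]


# Made-up exports from three sources.
asn_ranges = ["12.12.234.0/25", "12.12.234.128/25"]
wiki_hosts = ["12.12.234.15", "12.12.235.7"]
cmdb_hosts = ["12.12.234.15"]

targets = merge_ip_targets(asn_ranges, wiki_hosts, cmdb_hosts)
print(targets)
```

An address that ends up as a separate entry outside the known ranges is worth a closer look, since it may belong to a hosting provider nobody has documented.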

The best source for domains is your external DNS server. You should check that all of your domains point to known IP addresses of your organization. If some do not, that may mean that:

- The domain is linked to a third-party cloud service (Email Delivery Service, CRM, etc.) and you shouldn’t scan it.

- You just don’t know about all the hosting providers your company uses (shadow IT infrastructure?). That’s a good reason to investigate.
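Such a check is easy to script. The sketch below resolves each domain and reports addresses outside the known ranges; the function names, the `mail.corporation.com` domain, and the sample addresses are hypothetical, and the example uses a fake resolver so that it runs without network access. It only looks at IPv4 (A record) results.

```python
import ipaddress
import socket


def resolve(domain):
    """Return the IPv4 addresses a domain resolves to (empty list on failure)."""
    try:
        return socket.gethostbyname_ex(domain)[2]
    except OSError:
        return []


def find_unknown_domains(domains, known_networks, resolver=resolve):
    """Map each domain to its resolved IPs that fall outside the known networks."""
    networks = [ipaddress.ip_network(n) for n in known_networks]
    unknown = {}
    for domain in domains:
        outside = [
            ip for ip in resolver(domain)
            if not any(ipaddress.ip_address(ip) in net for net in networks)
        ]
        if outside:
            unknown[domain] = outside
    return unknown


# Fake DNS data for illustration; pass no resolver argument to use real DNS.
fake_dns = {
    "www.corporation.com": ["12.12.234.15"],
    "mail.corporation.com": ["203.0.113.10"],
}
report = find_unknown_domains(
    fake_dns, ["12.12.234.0/24"], resolver=lambda d: fake_dns.get(d, [])
)
print(report)
```

Every domain in the report needs a manual decision: exclude it as a third-party service, or add the newly discovered hosting to the inventory.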

Getting target lists for Web Application Scanning

How should you get the targets for WAS scanning?

- You can scan all the domains exported from the DNS Server. But what about the hosts without a domain name, but with an active Web Server?

- Some of my colleagues run active scans with nmap or masscan against the Network Perimeter Scan targets, looking for open TCP ports 443 or 80. But what about web servers running on other ports?

I prefer an easier way: I get the necessary hosts and ports from the main Network Perimeter Scan performed with a fully functional Network Scanner. For example, in the case of Nessus, these might be plugins that have “Web Server” or “HTTP Server” in their name:

- Web Server HTTP Header Internal IP Disclosure

- Web Server No 404 Error Code Check

- HTTP Server Type and Version

- Web Server robots.txt Information Disclosure

- Apache HTTP Server httpOnly Cookie Information Disclosure
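Filtering scan results by plugin name could look roughly like this. The sketch assumes the scan results were exported to CSV with `Host`, `Port` and `Name` columns; the exact column set depends on how the export is configured, and the sample rows are made up.

```python
import csv
import io

# Substrings of plugin names that indicate a web server was detected.
WEB_MARKERS = ("Web Server", "HTTP Server")


def web_targets_from_csv(csv_text):
    """Collect unique (host, port) pairs from findings whose plugin name mentions a web server."""
    targets = set()
    for row in csv.DictReader(io.StringIO(csv_text)):
        if any(marker in row["Name"] for marker in WEB_MARKERS):
            targets.add((row["Host"], int(row["Port"])))
    return sorted(targets)


# Made-up export with an assumed column layout.
sample = """Host,Protocol,Port,Name
12.12.234.15,tcp,8443,HTTP Server Type and Version
12.12.234.15,tcp,8443,Web Server robots.txt Information Disclosure
12.12.234.15,tcp,22,SSH Server Type and Version Information
12.12.234.16,tcp,80,HTTP Server Type and Version
"""

web_targets = web_targets_from_csv(sample)
print(web_targets)
```

Because the port comes from the scan itself, web servers on non-standard ports such as 8443 are picked up without a separate port sweep.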

Duplicates and Deduplication

If you use all IPs and all the domains as scan targets for a network scan, you will scan the same host several times. For example, these can all be the same host:

- 12.12.234.15

- www.corporation.com

- corporation.com

- additionaldomainforcorporation.com

I do not see this as a big problem in network scans. Unauthenticated scanning of any host takes only a few minutes, even if all TCP ports and the most commonly used UDP ports are checked. Thus, I do not see the need for optimization as long as you can still scan the entire perimeter on a daily basis.

However, Web Application scanning is different. A single WAS scan can last for hours. That’s normal. Here you will need deduplication.

Deduplicating targets for Web Application Scanning

How can you make sure that 12.12.234.15, www.corporation.com, corporation.com, additionaldomainforcorporation.com are actually the same site? You can do it by requesting the main page of each target and searching it for patterns specific to every web project that your organization uses.
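A rough sketch of such pattern-based grouping follows. The project names, the regular expressions, the `portal.corporation.com` host, and the page contents are all hypothetical; the `fetch` argument is any function that returns the main page HTML for a target, and here it is replaced by a dictionary lookup so the example runs offline.

```python
import re
from collections import defaultdict

# Hypothetical patterns: one regular expression per known web project.
PROJECT_PATTERNS = {
    "corporate-site": re.compile(r"<title>Corporation</title>"),
    "customer-portal": re.compile(r"Customer Portal"),
}


def identify_project(html):
    """Return the name of the first project whose pattern matches the page, or None."""
    for name, pattern in PROJECT_PATTERNS.items():
        if pattern.search(html):
            return name
    return None


def deduplicate(targets, fetch):
    """Group targets by matched project; fetch(target) returns the main page HTML."""
    groups = defaultdict(list)
    for target in targets:
        project = identify_project(fetch(target))
        # Unrecognized targets keep their own group so they are still scanned.
        groups[project or target].append(target)
    return dict(groups)


# Fake main pages for illustration only.
pages = {
    "12.12.234.15": "<title>Corporation</title>",
    "www.corporation.com": "<title>Corporation</title>",
    "corporation.com": "<title>Corporation</title>",
    "additionaldomainforcorporation.com": "<title>Corporation</title>",
    "portal.corporation.com": "<h1>Customer Portal</h1>",
}

groups = deduplicate(pages, pages.get)
scan_list = [members[0] for members in groups.values()]
print(scan_list)
```

Only one representative per group goes to the Web Application Scanner. In real use, `fetch` would perform an HTTP request for the main page of each target.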

You can make remote requests by deploying your own remote proxy server (e.g. Squid).

I will not give the whole configuration guide here, only some commands for installation and basic setup:

For Ubuntu Linux:

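$ sudo apt-get install squid3

$ sudo vim /etc/squid3/squid.conf

$ sudo htpasswd -b -c /etc/squid3/passwords user Password123

$ sudo systemctl restart squid3

Note that htpasswd accepts the password on the command line only with the -b option; without it, the password is requested interactively.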

And here is an example of the Squid config file:

auth_param basic program /usr/lib/squid3/basic_ncsa_auth /etc/squid3/passwords

auth_param basic realm proxy

acl authenticated proxy_auth REQUIRED

http_access allow authenticated

acl SSL_ports port 443

acl Safe_ports port 80 # http

acl Safe_ports port 21 # ftp

acl Safe_ports port 443 # https

acl Safe_ports port 70 # gopher

acl Safe_ports port 210 # wais

acl Safe_ports port 1025-65535 # unregistered ports

acl Safe_ports port 280 # http-mgmt

acl Safe_ports port 488 # gss-http

acl Safe_ports port 591 # filemaker

acl Safe_ports port 777 # multiling http

acl CONNECT method CONNECT

acl lan src 192.168.56.1-192.168.56.255 # allow connections from these IPs only

http_access deny !Safe_ports

http_access deny CONNECT !SSL_ports

http_access allow localhost manager

http_access deny manager

http_access allow localhost

http_access allow lan

http_access deny all

http_port 3128 # proxy port

Update: for CentOS 7:

$ sudo yum install squid httpd-tools

$ sudo vim /etc/squid/squid.conf

Add these lines to the file /etc/squid/squid.conf:

auth_param basic program /usr/lib64/squid/basic_ncsa_auth /etc/squid/passwords

auth_param basic realm proxy

acl authenticated proxy_auth REQUIRED

http_access allow authenticated

acl lan src 192.168.56.1-192.168.56.255 # allow connections from these IPs only

And edit this value in the same file:

http_port 4356

Set the password and restart the service:

$ sudo htpasswd -b -c /etc/squid/passwords user Password123

$ sudo service squid restart

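Make requests from Python this way:

import requests

url = 'http://www.corporation.com/'  # example target, replace with the URL you want to check

proxies = {'http': 'http://user:Password123@12.12.234.11:3128/',

           'https': 'http://user:Password123@12.12.234.11:3128/'}  # Squid's http_port speaks plain HTTP, so both entries use http://

response = requests.get(url, proxies=proxies, verify=False)  # verify=False disables TLS certificate validation

print(response.text)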

In conclusion

The main ideas that I would like to express in this post:

- Scanning the Network Perimeter is important, and it is very different from scanning the Office Network and from scanning Production / Critical Assets within your infrastructure.

- You will need to keep the list of scan targets up to date. Getting and correlating data from the different systems that store data about your assets will require some scripting skills.

- You will probably need scripting skills to manage scan tasks and analyze scan results.

- Vulnerability Management vendors are awesome. Doing vulnerability assessment without scanning tools is nearly impossible.

Hi! My name is Alexander and I am a Vulnerability Management specialist. You can read more about me here. Currently, the best way to follow me is my Telegram channel @avleonovcom. I update it more often than this site. If you haven’t used Telegram yet, give it a try. It’s great. You can discuss my posts or ask questions at @avleonovchat.

And I invite all Russian speakers to one more Telegram channel, @avleonovrus; I now post there first.


Great post!

How do you deal with perimeter scans when the only IP your company has is a reverse proxy, which will direct requests to different DMZ servers according to the virtual host you’re using to get there?

Just scanning the IP won’t do much good…

Hi, Jay!

Thanks for the comment and your kind words! Yes, you are right. Nessus doesn’t work well with this kind of host. Assessment of the network perimeter is only a part of the process. It’s important to scan the infrastructure from the inside as well. And actually, it’s very lucky when a company has such a small and controllable network perimeter 😉

Hello Aleksandr,

I use a different approach to controlling the network perimeter.

First, my SIEM system monitors mirrored inbound and outbound network traffic in real time, and we have rules for it (control of critical data, PINs, and so on).

Second, we scan network assets and analyze log files.

Hi Andrei!

Thanks for the comment! It’s really great to combine control of network traffic, log analysis, and active scanning in one process. Basically, this is what Tenable promotes with their Tenable SecurityCenter. =)
