Here I combine two posts [1, 2] from my Telegram channel about comparisons of Vulnerability Management products published in October 2019. One of them, published by Forrester, was more of a marketing piece; the other, published by Principled Technologies, was more technical.

I had some questions for both of them. It’s also great that the Forrester report named Qualys, Tenable and Rapid7 as leaders, and that Principled Technologies reviewed the Knowledge Bases of the same three vendors.

Let’s start with Forrester.

Forrester Wave “Vulnerability Risk Management”

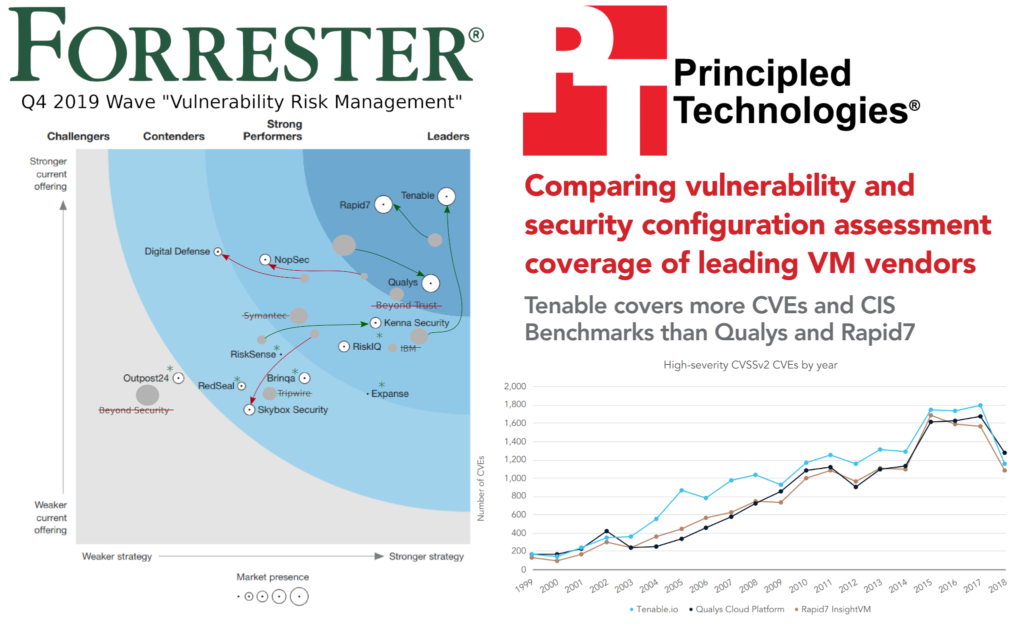

It’s becoming a good tradition to share my impressions of the Forrester Wave “Vulnerability Risk Management” report (here is one for Q1 2018). You can download a free reprint for Q4 2019 from the Tenable website. This time I even edited the illustration a bit.

I tried to show how the positions of the vendors changed and which vendors were added or removed. Please note that this is not official; it’s just an extra layer that I added for fun.

What I liked

The report has become much more reasonable than last year. The traditional VM Big Three (Qualys, Tenable and Rapid7) are leaders. Stagnant VM vendors have been downgraded or removed from the report completely. This is probably due to new and more sensible inclusion criteria: “product improvements over the last two years”, “annual product revenue greater than $10 million”, “VRM product was responsible for over 50% of their total revenue”, “at least 100 enterprise customers”, etc.

What I did NOT like

This hasn’t changed much since last year:

- The main slogan of this report is “Prioritization And Reporting Are Key Differentiators”. According to Forrester, Risk Prioritization is based on measuring vulnerabilities, assets and network segments. Well, I agree that Risk Prioritization is important, BUT (!) only when your Vulnerability Detection is perfect. This is clearly not the case at the moment! For proper Risk Prioritization it’s necessary to understand the limitations of Vulnerability Scanners and how to obtain data for Asset and Network classification. Unfortunately, this report doesn’t pay much attention to the core functionality of VM products; it focuses on the GUI, reports and high-level marketing features. “Vulnerability enumeration” accounts for only 15% of the overall weighting. It’s really sad.

- Profile descriptions are based on marketing materials from vendors (BTW, such an extract might be quite useful) and on some user quotes. These users also write about reports and prioritization, like “custom reporting on individual business units was cumbersome” and “customers appreciate the new UI and strong reporting capabilities”. It seems these users don’t have (don’t see/don’t want to discuss) other problems.

- Forrester mixes products that actually scan network hosts with products that only analyze imported data, perimeter-only services (why not add over 9000 ASV scanners then?) and scan services with a “dedicated security specialist”. The authors even write several times that some products “cannot be treated as a proper vulnerability management tool”, so why include them in the report?

In any case, the report was better than last year. I hope Forrester will make separate reports for the tools that actually detect vulnerabilities and the tools that only aggregate and prioritize them. It would also be great to change the inclusion criteria and add smaller and more local VM vendors.

Now let’s look at something more technical.

Principled Technologies “Comparing vulnerability and security configuration assessment coverage of leading VM vendors”

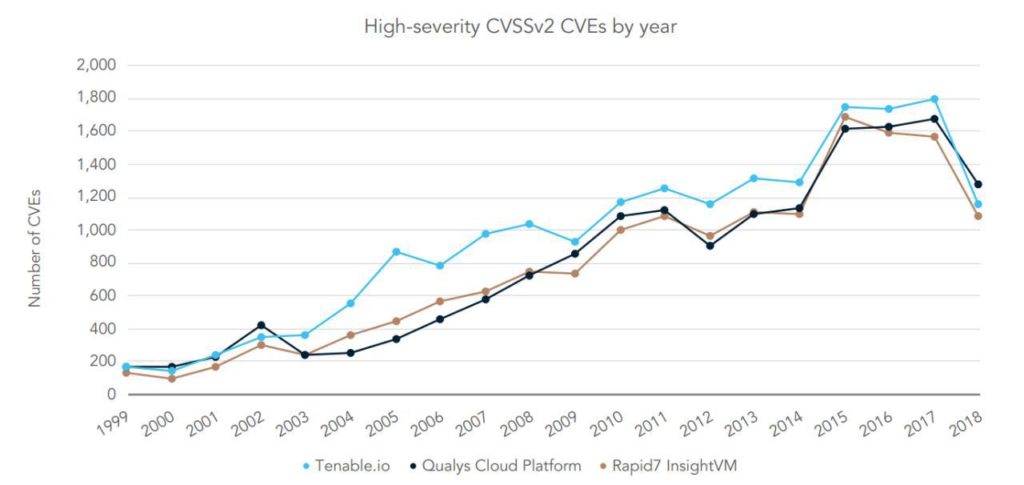

You can get a free reprint of this 14-page report from the Tenable website. Tenable’s marketing team actively shared it. This is not surprising, because the main idea of the report is that Tenable covers more CVEs and CIS benchmarks than Qualys and Rapid7.

So, my impressions:

- Hallelujah! Finally, a comparison of Vulnerability Management products based on something measurable – on their Knowledge Bases. And at least one VM vendor is not afraid to use it in marketing and mentions the competitors directly. This is a huge step forward and I hope that this is the beginning of something more serious. We really need to start talking more about the core functionality of VM products.

- However, this particular report is just a Tenable advertisement. This is not even hidden. I really like Nessus and Tenable, and I believe that they have a very good Knowledge Base, but reading on every page how great Tenable’s products are is just ridiculous. It would be much better to read it in a more neutral form.

- Using only CVE IDs to compare Vulnerability Knowledge Bases is NOT correct (strictly speaking), because for many software products most vulnerabilities do not have CVEs, only patch IDs. A CVE-based comparison also doesn’t distinguish between types of vulnerability checks: remote banner-based, remote exploit-based and local. To make a reliable comparison, it’s necessary to map all existing vulnerability detection plugins of the VM products, but this is MUCH more difficult.

- The CVE-based comparison in this report is not really informative. They only compare absolute numbers of IDs, grouping them by year, software product (CPE) and CVSS v2 score. Why is this wrong? If VM vendor A covers 1000 CVEs and vendor B covers 1000 CVEs, this does not mean that they have the same database or that it is complete. The real intersection between the KBs may be only 500 IDs, so each vendor would be able to detect only half of the other’s vulnerabilities. It matters, right? In my old express comparison of Nessus and OpenVAS Knowledge Bases I demonstrated this and tried to suggest reasons why some vulnerabilities are covered by one vendor and not by another. If you compare CVEs as sets of objects, it turns out that each VM product has its own advantages and disadvantages.

- The CIS-based comparison in this report uses only information about certified implementations from the CIS website, without regard to versions and levels. CIS Certification is an expensive and complicated procedure that is NOT mandatory and does not affect anything. I once implemented many CIS standards for Linux/Unix in PT Maxpatrol. Well, yes, they are not certified and you won’t see them on the CIS website, but does this mean that they are not supported in the VM/CM product? Of course not! So, it’s a very strange way of comparing Compliance Management capabilities.
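
To make the point about comparing CVEs as sets more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the vendor names are hypothetical, the `CVE-XXXX-NNNN` IDs are placeholders that do not refer to real vulnerabilities, and the 1000/1000/500 numbers simply mirror the example above rather than any real Knowledge Base.

```python
def compare_kbs(kb_a, kb_b):
    """Compare two collections of CVE IDs as sets, not by their sizes."""
    a, b = set(kb_a), set(kb_b)
    common = a & b
    return {
        "only_a": a - b,   # covered by A, missing in B
        "only_b": b - a,   # covered by B, missing in A
        "common": common,  # covered by both
        # Share of the other vendor's CVEs that each vendor also covers.
        "a_covers_b": len(common) / len(b) if b else 0.0,
        "b_covers_a": len(common) / len(a) if a else 0.0,
    }


# Hypothetical data: placeholder IDs, not real CVEs or real vendor coverage.
vendor_a = {f"CVE-XXXX-{i:04d}" for i in range(0, 1000)}
vendor_b = {f"CVE-XXXX-{i:04d}" for i in range(500, 1500)}

result = compare_kbs(vendor_a, vendor_b)

# Both KBs have the same absolute size...
print(len(vendor_a), len(vendor_b))
# ...but only half of each KB is shared with the other one.
print(len(result["common"]), result["a_covers_b"], result["b_covers_a"])
```

The `only_a` and `only_b` sets are the interesting part in practice: those are the IDs worth inspecting to understand why one vendor covers a vulnerability and the other does not. Note that this still inherits the limitations above, since it says nothing about vulnerabilities without CVEs or about the type of check behind each ID.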

In conclusion, the idea behind this report is good, but the implementation is rather disappointing. If one of the VM vendors, researchers or customers wants to make a similar comparison, public or private, but in a much more reliable and fair way, contact me. I will be glad to take part in this.

Hi! My name is Alexander and I am a Vulnerability Management specialist. You can read more about me here. Currently, the best way to follow me is my Telegram channel @avleonovcom. I update it more often than this site. If you haven’t used Telegram yet, give it a try. It’s great. You can discuss my posts or ask questions at @avleonovchat.

I invite all Russian speakers to one more Telegram channel, @avleonovrus; that is now where I post first.