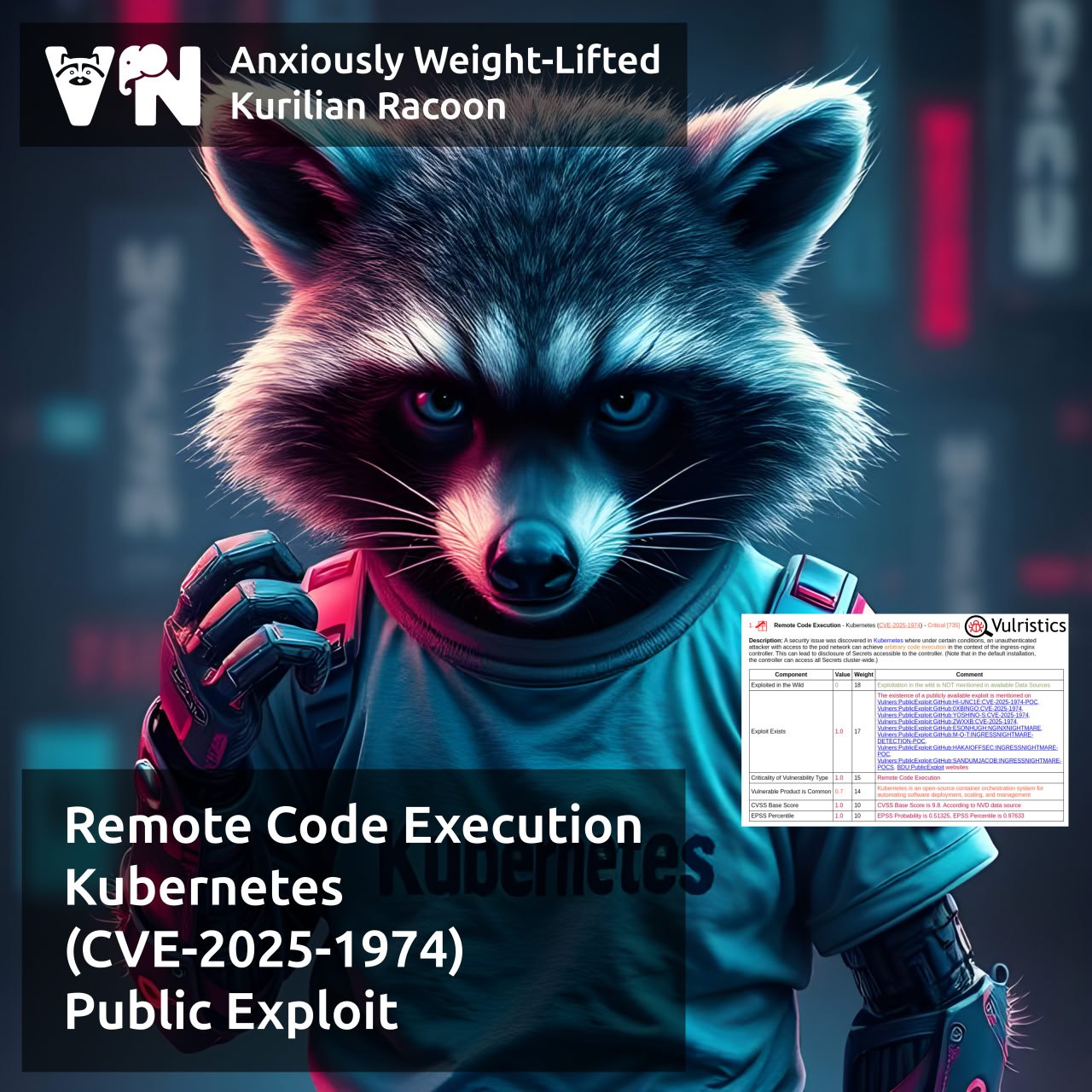

About the Remote Code Execution vulnerability in Kubernetes ingress-nginx (CVE-2025-1974). An unauthenticated attacker with access to the Pod network can achieve arbitrary code execution in the context of the ingress-nginx controller. This can lead to disclosure of the Secrets accessible to the controller, and in the default installation the controller can access all Secrets cluster-wide.

🔹 On March 24, Wiz published a write-up on this vulnerability, naming it (together with CVE-2025-1097, CVE-2025-1098, and CVE-2025-24514) IngressNightmare. Wiz researchers identified 6,500 vulnerable controllers exposed to the Internet. 😱 The Kubernetes blog reports that in many common scenarios the Pod network is accessible to all workloads in the cloud VPC, or even to anyone connected to the corporate network. Ingress-nginx is used in over 40% of Kubernetes clusters.

🔹 Public exploits have been available on GitHub since March 25. 😈

Update ingress-nginx to v1.12.1, v1.11.5, or later!
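
To make the version guidance concrete, here is a minimal Python sketch that checks whether a controller version string falls at or above the fixed releases named above. The function names `parse_version` and `is_patched` are hypothetical helpers, not part of any Kubernetes tooling, and the sketch assumes the version is a plain `vMAJOR.MINOR.PATCH` tag such as the one on the controller image. It treats the 1.11 line as fixed from v1.11.5 and everything else as fixed from v1.12.1.

```python
import re

# Fixed releases named in the post: v1.11.5 for the 1.11 line,
# v1.12.1 for the 1.12 line and anything newer.
PATCHED_1_11 = (1, 11, 5)
PATCHED_1_12 = (1, 12, 1)


def parse_version(tag: str) -> tuple:
    """Turn a tag like 'v1.12.0' or '1.11.5' into a (major, minor, patch) tuple."""
    match = re.fullmatch(r"v?(\d+)\.(\d+)\.(\d+)", tag.strip())
    if match is None:
        # Assumption: only plain three-part versions are supported in this sketch.
        raise ValueError(f"unrecognized version tag: {tag!r}")
    return tuple(int(part) for part in match.groups())


def is_patched(tag: str) -> bool:
    """Return True if the ingress-nginx version is at or above a fixed release."""
    version = parse_version(tag)
    if version[:2] == (1, 11):
        # The 1.11 line received its own fix in v1.11.5.
        return version >= PATCHED_1_11
    # Older lines have no fixed release of their own; newer ones need >= v1.12.1.
    return version >= PATCHED_1_12
```

For example, `is_patched("v1.12.0")` and `is_patched("v1.11.4")` both return `False`, while `is_patched("v1.11.5")` returns `True`. A check like this only reflects the version number, so it does not replace actually upgrading the controller.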