Vulristics: Beyond Microsoft Patch Tuesdays, Analyzing Arbitrary CVEs. Hello everyone! In this episode I would like to share an update on my Vulristics project.

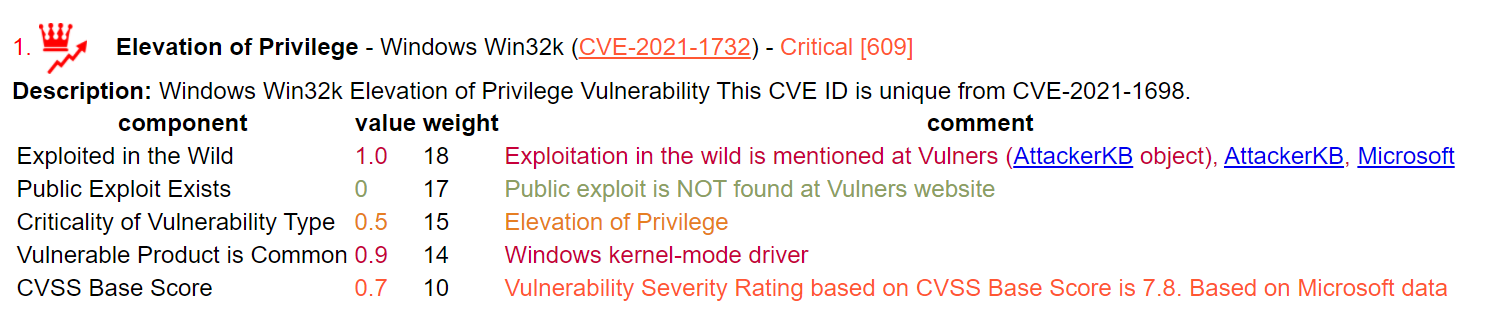

For those who don’t know, in this project I am working on an alternative vulnerability scoring system based on publicly available data, to highlight vulnerabilities that need to be fixed as soon as possible. Roughly speaking, it is something like Tenable VPR, but more transparent and open source. Currently it works with far fewer data sources. The score mainly depends on the type of vulnerability, the prevalence of the vulnerable software, the availability of public exploits, and exploitation in the wild.
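To make the idea of combining these factors concrete, here is a minimal sketch of a weighted score. It is not the actual Vulristics code: the `Vulnerability` class, the `score` function, the weights, and the 0-to-1 scale are all assumptions chosen for illustration, and the CVE ID is a placeholder.

```python
from dataclasses import dataclass

# Hypothetical weights, for illustration only; these are NOT the values
# Vulristics uses. They sum to 1.0 so the final score stays in [0, 1].
WEIGHTS = {
    "exploited_in_wild": 0.4,
    "public_exploit": 0.3,
    "vulnerability_type": 0.2,
    "software_prevalence": 0.1,
}


@dataclass
class Vulnerability:
    """One CVE with each scoring factor already normalized to [0, 1]."""
    cve_id: str
    exploited_in_wild: float    # 1.0 if exploitation in the wild is reported
    public_exploit: float       # 1.0 if a public exploit is known
    vulnerability_type: float   # higher for more dangerous types (e.g. RCE)
    software_prevalence: float  # higher for more widely deployed software


def score(vuln: Vulnerability) -> float:
    """Return the weighted sum of the factors, rounded to 3 decimals."""
    total = sum(weight * getattr(vuln, name) for name, weight in WEIGHTS.items())
    return round(total, 3)


# Made-up input values for a placeholder CVE.
example = Vulnerability(
    cve_id="CVE-XXXX-YYYY",
    exploited_in_wild=0.0,
    public_exploit=1.0,
    vulnerability_type=0.5,
    software_prevalence=0.5,
)
print(example.cve_id, score(example))
```

Keeping the weights in one visible table is what makes a score like this transparent: anyone can see why a given vulnerability ranks where it does and adjust the weights to their own priorities.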

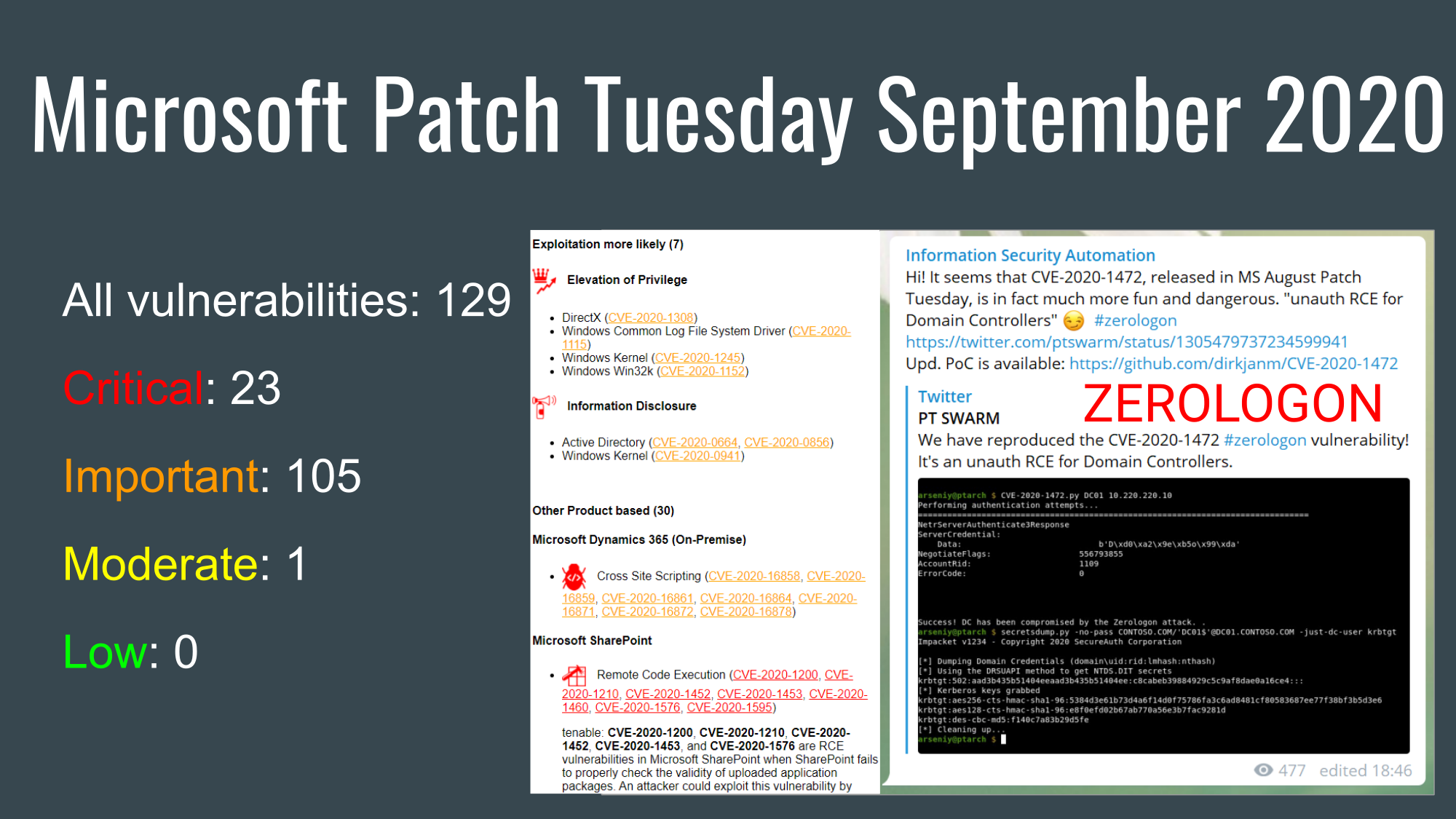

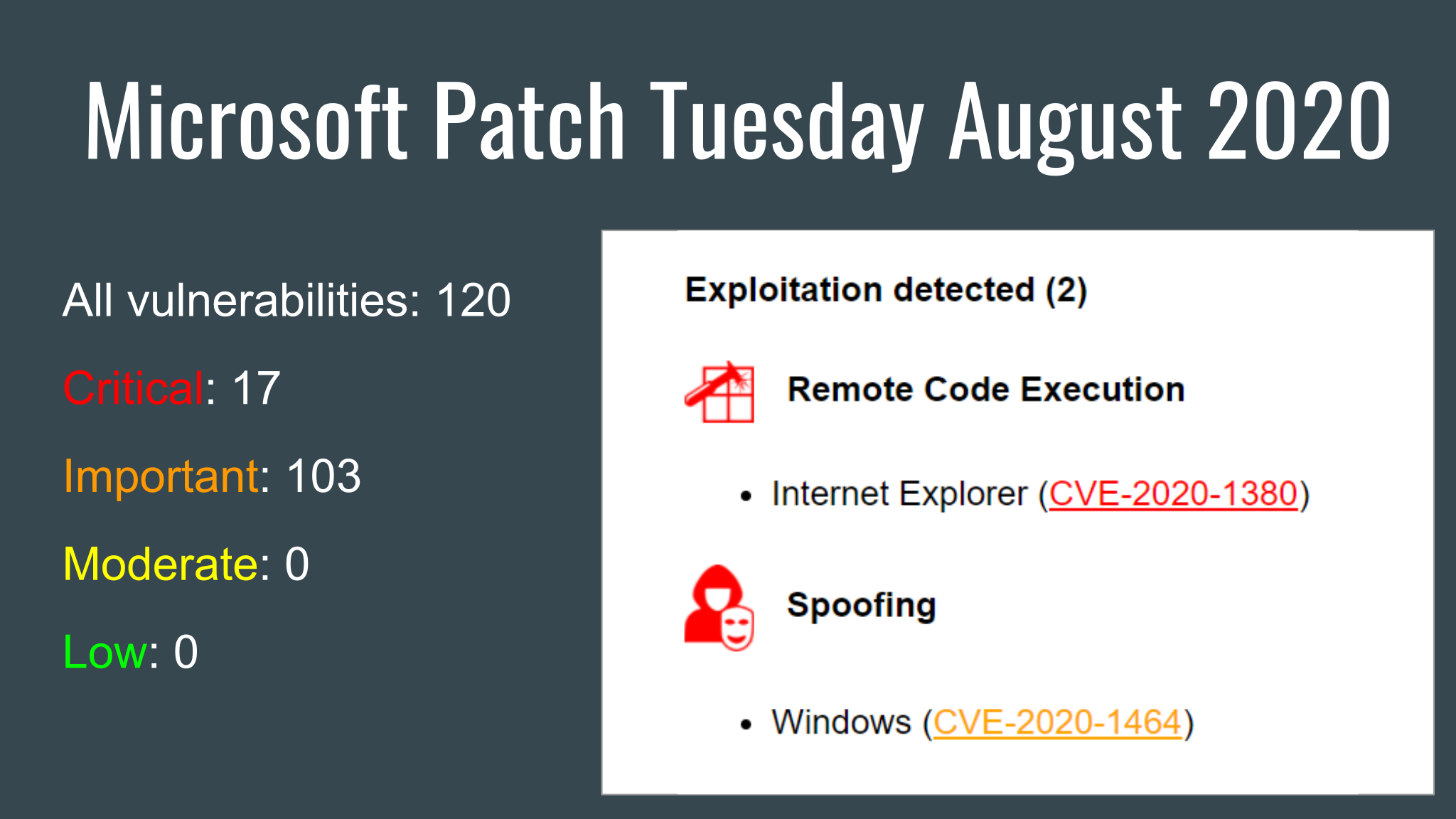

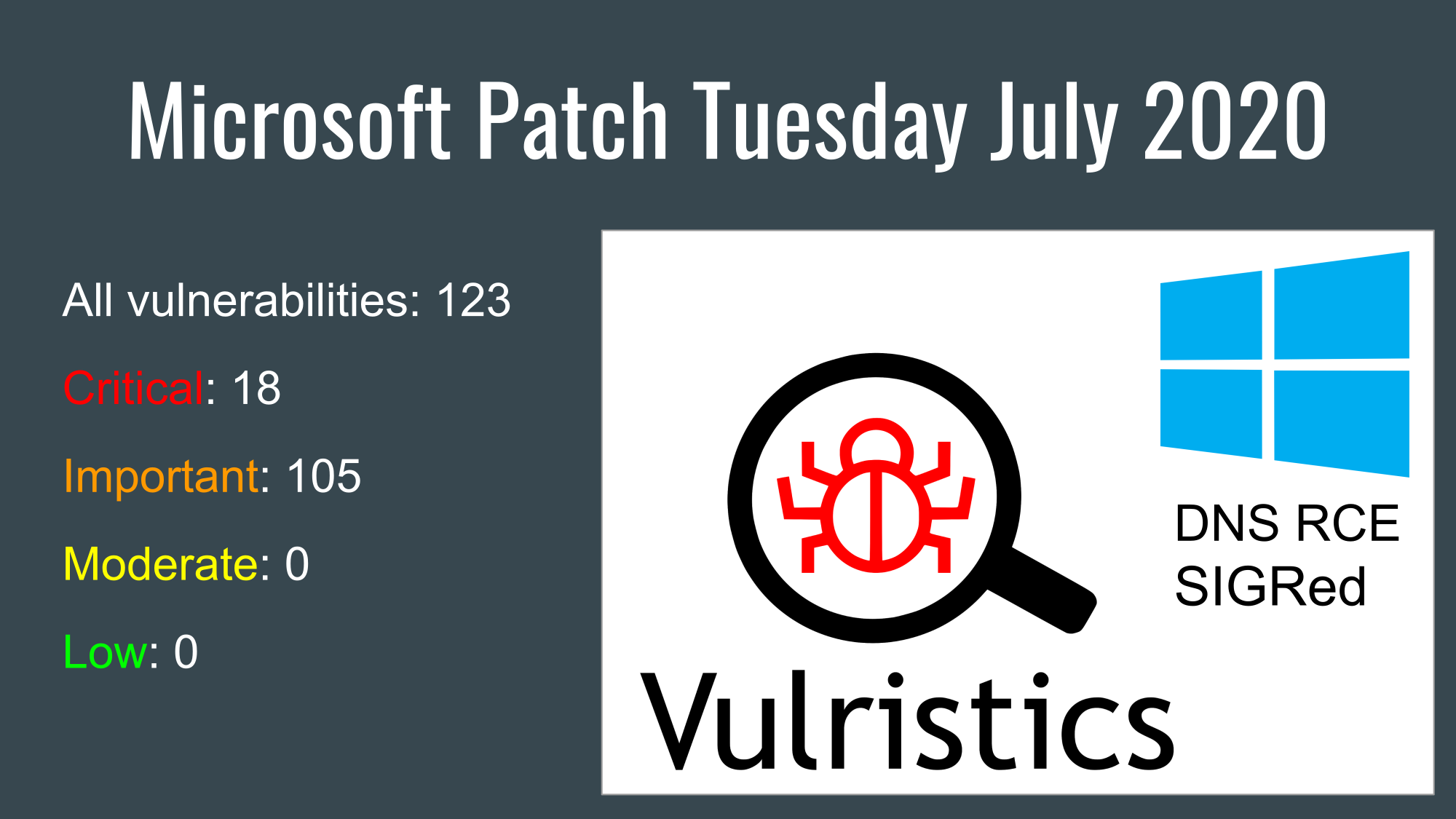

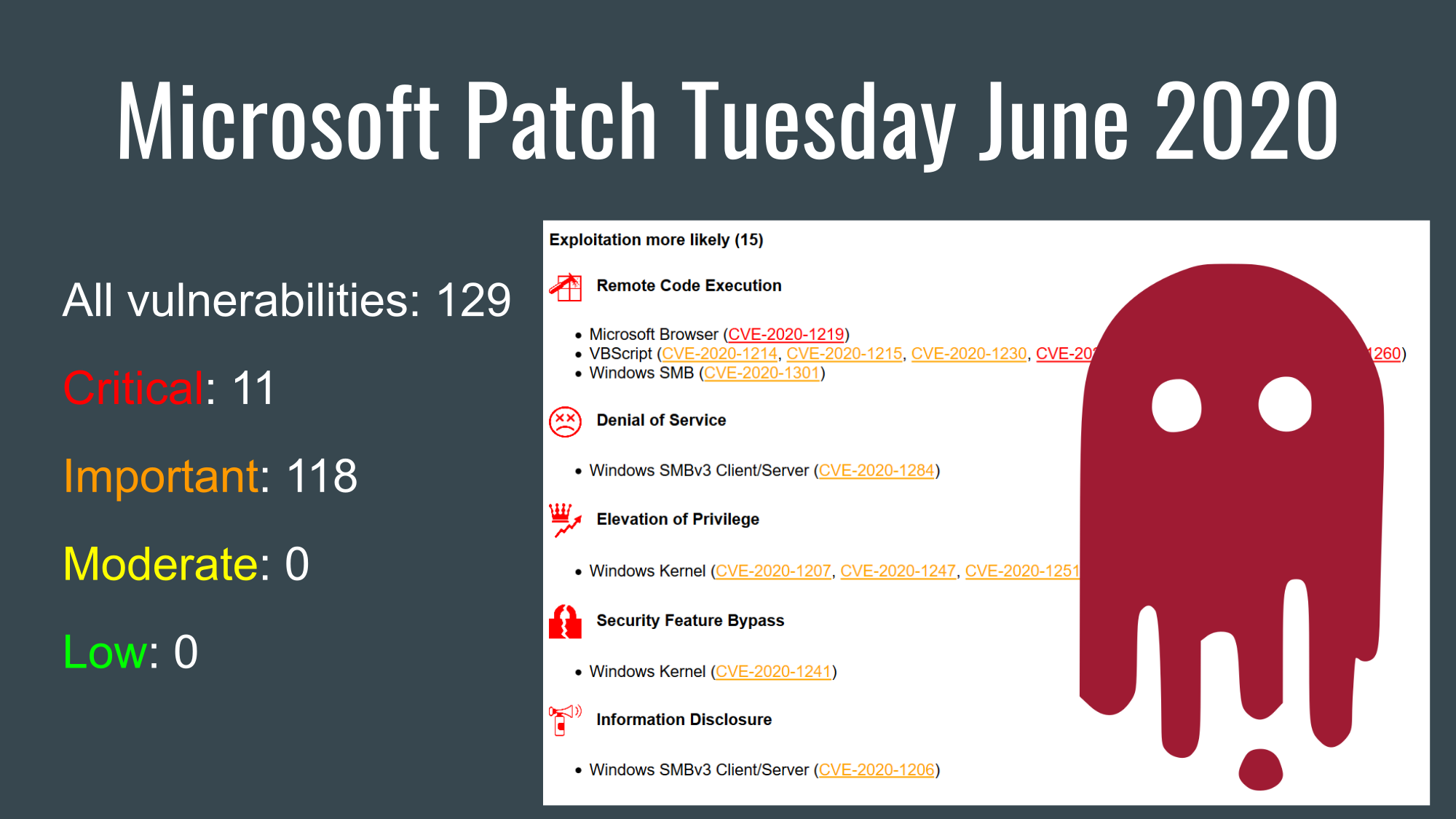

I started with Microsoft Patch Tuesday vulnerabilities because Microsoft provides much better data than other vendors: the title of each vulnerability includes both the type of vulnerability and the name of the vulnerable software.

But it’s time to go further: you can now use Vulristics to analyze any set of CVEs. I changed the scripts that were tightly coupled to the Microsoft data source and added new features to get the type of vulnerability and the name of the software from the CVE description.
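One simple way to pull a vulnerability type and a software name out of free-text descriptions is keyword matching. The sketch below shows that general technique only, and the real Vulristics detection logic may work differently. The `TYPE_PATTERNS` and `KNOWN_SOFTWARE` tables, the `detect` function, and the sample description are all hypothetical.

```python
import re
from typing import Optional, Tuple

# Hypothetical keyword tables, for illustration only. A real tool would need
# far larger lists. The first matching entry wins, so order matters.
TYPE_PATTERNS = [
    ("Remote Code Execution", r"remote code execution|execute arbitrary code"),
    ("Elevation of Privilege", r"privilege escalation|elevation of privilege"),
    ("Denial of Service", r"denial of service"),
    ("Information Disclosure", r"information disclosure"),
]
KNOWN_SOFTWARE = ["Sudo", "OpenSSL", "Apache HTTP Server"]


def detect(description: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (vulnerability type, software name); None where nothing matches."""
    vuln_type = next(
        (name for name, pattern in TYPE_PATTERNS
         if re.search(pattern, description, re.IGNORECASE)),
        None,
    )
    software = next(
        (name for name in KNOWN_SOFTWARE
         if re.search(r"\b" + re.escape(name) + r"\b", description, re.IGNORECASE)),
        None,
    )
    return vuln_type, software


# Made-up description text, not a quote from any CVE record.
print(detect("A heap-based buffer overflow in sudo allows privilege escalation to root."))
```

Descriptions that match nothing come back as `(None, None)`, which makes it easy to list the CVEs that still need manual review or new keywords.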

![Elevation of Privilege - Sudo (CVE-2021-3156) - High [595]](https://avleonov.com/wp-content/uploads/2021/03/image-2.png)